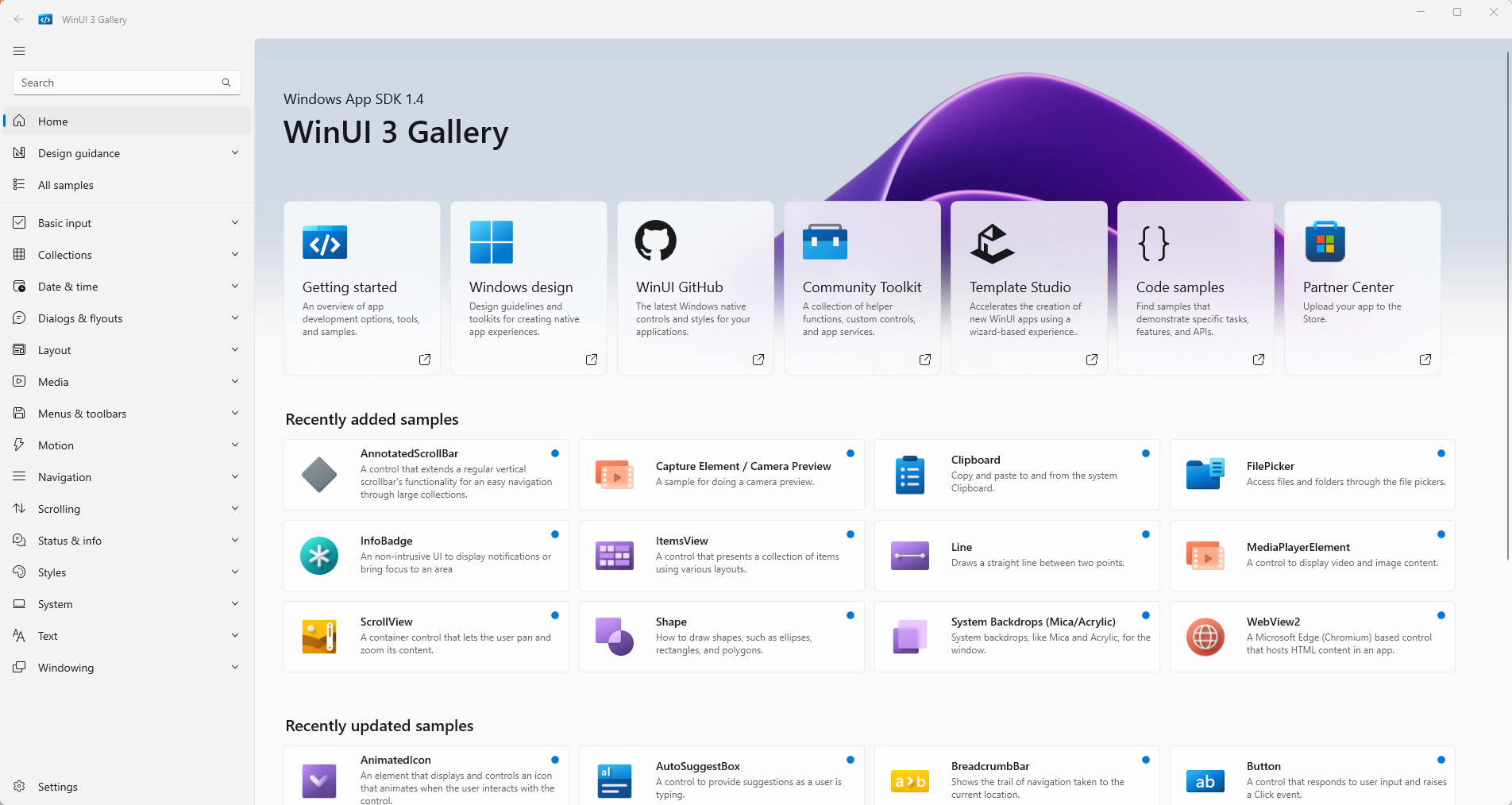

This edition of DDD Europe was something of a restart: a new venue (and city) and a new format, with three conferences in three days. The complementary conferences (Event Centric and Data Mesh) felt like a natural step for a DDD conference, and I was excited to go to some of their sessions, but I failed: I did not have enough bandwidth for that.

I don’t know about you, but during such conferences my brain continuously receives inputs and weaves them together, so it takes some time to arrive at a “final” summary of all the new things I learned, the tools I saw, and the ideas I corrected or threw away as a result of the talks and the hallway conversations I had the opportunity to enjoy. What follows is the “braindump” I shared with my colleagues a few days later, in answer to Nick Tune’s question: What are your biggest takeaways from DDD EU? What are the things we should start thinking about and learning now?

Gregor Hohpe’s description of a platform (or what is not a platform)

The idea of building software that can do everything and requires only configuration is not valid for platforms; we need to forget that. A platform is not a framework, but an enabler.

Nobody can anticipate every use case. Platforms should not try to do that.

If you haven’t heard of a single case where teams used your platform in an unexpected way, you didn’t build a platform.

Gregor Hohpe – Platform Engineering is Domain-Driven Design

Platforms are like bearings: they must ensure that the “machine” runs smoothly without any intervention. (This is a key requirement of a bearing: one must not know about its existence during “runtime”.)

No requests for help to release, grant access, or assign permissions. Self-service is the key principle.

Before I became a software person, I studied mechanical engineering, specialising in trains. So it is no surprise that Gregor’s advice regarding guardrails hit home.

Railways keep us on track. They ensure that we don’t derail. They are enabling constraints which help to travel fast and straight. Guardrails have a different job. They can’t tell us how to travel straight forward. They can’t stop us from going in circles and hitting them again and again.

The talk was not new, but I had never seen it before, so it was new to me. It contains a lot of other dos and don’ts too, so it is worth repeating until everyone building a platform “gets the message”. Here is a version from 2024:

Cyrille Martraire’s advice to be unconventional when modelling

Who should present a talk called ‘Breaking Conventions for More Performant Models’ if not Cyrille Martraire, a very unconventional person himself? Afterwards, Cyrille called this talk “basic, common sense”. I would say, yes, it is, after you’ve made enough mistakes to improve your common sense. It was an excellent talk on how to avoid those mistakes.

I think we all agree with this definition of what modelling means. The differences start two minutes afterwards: how much to simplify?

When talking about time in the hotel business, how many concepts of time do you have? And what kind of “time” models are those?

My take is that the time concept for a guest and the time concept of the cleaning personnel should not have much in common.

Cyrille’s tips didn’t stop here. Finding the right model is challenging because, if chosen well, it will not only solve a problem but also lead to new ideas.

“Indexing” is a technical term. But wouldn’t you, as a user of a service for managing appointments, consider a view about “very soon, soon, later” much more helpful than an accurate but very noisy hour-by-hour list? The need to balance reports can lead to an improved user experience.

Alberto Brandolini’s “fast-forward” towards a multi-model world

I always enjoy Alberto’s talks, and this one wasn’t an exception. The clarity, the necessary portion of irony and the conclusion at the end speak for themselves.

What is a Bounded Context?

What happens if your product goes multi-country? Which characteristics remain as clear as described on the slide? Well … it depends.

This is the usual way, and it is a recipe for disaster.

But it does not need to be.

The final advice for everyone working on a long-lasting product: Maps & disciplined thinking, because words are just not enough.

Apropos, product development:

The rollercoaster called product development, or, as Xin Yao and John Cutler call it: The Beautiful Mess

What a combo! Xin, a software architect and DDD change agent, and John, a product expert with extraordinary visualisation skills, have shared the stage for an hour to show us the differences and the synergies of this industry in which we still talk about “the two sides”.

I can’t distil this talk because each slide is a gem, and each explanation should be heard from the speakers. I can share my favourite slide, though, because it shows my day-to-day work as a single picture.

Additionally …

… I brought back some old and new tips from these talks.

The tool: https://tryitwithann.com/ A tool for rapid prototyping AND automatic specification generation (as unit tests) for event-based systems, based on Miro.

The talks which made our brains go in circles:

Barry O’Reilly’s Residuality Theory. Not new, but still a provocative and must-heard-about concept.

Dr. Jabe Bloom’s university-level talk ‘Through the Looking Glass: Applying Architecture Principles to Social Systems’ began with the history and analysis of Conway’s Law and concluded with the definition of socio-technical architecture.

This experience report probably feels unstructured, confusing, “a lot!”, and I won’t disagree. It is always “a lot” because we live in complex times and address complicated problems. However, learning about these things helps us to stay in control as much as possible, and to accept the lack of control when necessary.

I will call it just like my friend Xin does: The DDD magic.

Xin Yao and John Cutler – Modelling Stories of the Beautiful Mess – DDD Europe 2025

Edition 4 of IEC 61131-3 has been available online since May 2025. In the following post, I will briefly explain the most important differences between Edition 3 and Edition 4.

I was unable to test the new features of Edition 4 as I did not have a development environment that supported the new functions of Edition 4 at the time of the comparison. I can therefore only offer a general overview of the differences. I used IEC 61131-3 (Edition 3.0) 2013-02 and IEC 61131-3 (Edition 4.0) 2025-05 for the comparison. However, if you want or need to familiarise yourself more intensively with IEC 61131-3, there is no getting around the standard.

The first impression

When comparing the tables of contents, it is noticeable that in Edition 3 the appendix begins on page 221, while in Edition 4 the appendix begins on page 241.

A new addition is the 6-page chapter 6.9 Synchronization of concurrent execution. The corresponding sub-chapters reveal that this is about mutexes and semaphores.

Chapter 7.2 Instruction List (IL) is no longer included in Edition 4. This means that IL is no longer part of IEC 61131-3, but this does not necessarily mean that IL is no longer offered by manufacturers. Each manufacturer can continue to decide for themselves whether they want to offer IL in their development environment or not.

Octal literals

In addition to the integer literals (e.g. -43), the floating point literals (e.g. -43.8), the binary literals (e.g. 2#1101_0110) and the hexadecimal literals (e.g. 16#E26B), there are also octal literals (e.g. 8#267) in Edition 3. The octal literals are no longer supported by Edition 4. In Edition 3, the octal literals were already labelled as deprecated.

USTRING and UCHAR data types

With the two new data types USTRING and UCHAR, character strings are now also supported in which each character is encoded according to UTF-8.

While a WSTRING / WCHAR uses 2-byte (16-bit) units, UTF-8 encodes each character as a sequence of 1 to 4 bytes.

UTF-8, or Unicode Transformation Format – 8 bit, is backwards compatible with 7-bit ASCII, as the first 128 characters are encoded identically in UTF-8 and ASCII. This means that every valid ASCII character string is also a valid UTF-8 character string, so that most programs written for ASCII can be easily adapted to UTF-8.

UTF-8 is particularly widespread in Internet applications. Both the Internet Mail Consortium (IMC) and the World Wide Web Consortium (W3C) recommend the use of UTF-8 as a standardised encoding.

USTRING and UCHAR literals are specified as follows:

x : USTRING := USTRING#'ABC';

x : USTRING := U#'ABC';

y : UCHAR := UCHAR#'A';

y : UCHAR := U#'A';

LEN_MAX and LEN_CODE_UNIT

The character string functions are described in chapter 6.6.2.5.12. The functions LEN_MAX and LEN_CODE_UNIT are new additions.

LEN_MAX returns the maximum length of the string:

VAR

x : STRING[100] := 'Hello';

y : WSTRING[100] := WSTRING#'Hello';

z : USTRING[100] := USTRING#'Hello';

END_VAR

a := LEN_MAX(x); // a = 100

b := LEN_MAX(y); // b = 100

c := LEN_MAX(z); // c = 100

LEN_CODE_UNIT returns the number of code units occupied by the string. For the data types STRING and WSTRING, LEN_CODE_UNIT returns the same value as for LEN. With USTRING, however, the value of LEN_CODE_UNIT can be greater than that of LEN.

a := LEN_CODE_UNIT(x); // a = 5

b := LEN_CODE_UNIT(y); // b = 5

c := LEN_CODE_UNIT(z); // c = 5

As the strings in this example only contain ASCII characters, LEN_CODE_UNIT returns the same value for all three data types.
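To see when the two values diverge, here is a small sketch in Go rather than in an IEC 61131-3 language. Go is used only because its strings are UTF-8 byte sequences and the code can be run today. The helper `countUnits` and the sample strings are assumptions made for illustration. The sketch counts characters (code points) and UTF-8 code units, which is roughly the distinction between LEN and LEN_CODE_UNIT for a USTRING.

```go
package main

import (
	"fmt"
	"unicode/utf8"
)

// unitCounts holds the two lengths compared in the text.
type unitCounts struct {
	chars     int // number of Unicode code points
	utf8Units int // number of 8-bit code units (bytes) in UTF-8
}

// countUnits is a hypothetical helper, not part of any IEC 61131-3 library.
func countUnits(s string) unitCounts {
	return unitCounts{
		chars:     utf8.RuneCountInString(s),
		utf8Units: len(s), // len on a Go string counts bytes
	}
}

func main() {
	// ASCII text, the sign U+2211 and the emoji U+1F579.
	for _, s := range []string{"Hello", "\u2211", "\U0001F579"} {
		c := countUnits(s)
		fmt.Printf("%q: chars=%d utf8Units=%d\n", s, c.chars, c.utf8Units)
	}
}
```

For 'Hello' both counts are 5, while U+2211 is one character in three code units and U+1F579 is one character in four.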

Specification of characters by character code

In a string or character literal, any character can be specified by the character code. This is specified in hexadecimal notation after a $ in curly brackets:

VAR

x : STRING := '${9}'; // Control character for tabulator

y : WSTRING := WSTRING#'${2211}'; // Character ∑

z : USTRING := USTRING#'${1F579}'; // Emoji 'Joystick'

END_VAR

Character codes with fixed lengths (e.g. '$0A' or "$00C4") are still supported.

Note: For the representation of the character code, I would have liked the usual coding for the respective number system to be used, i.e. 16#1F579 instead of just 1F579. Even if it is usual to specify character codes in hexadecimal, it is easier to recognise in which number system the character code was specified.

Unfortunately, the Character Map in Windows only accepts character codes with a maximum of 4 digits. The function Insert -> Symbol in Word is much more helpful for finding the right character code.

The string and character literals are described in chapter 6.3.3 Character string literals.

Data type conversion

Figure 11 in chapter 6.6.1.6 Data type conversion provides an overview of the implicit and explicit data type conversions. Compared to Edition 3, there are some adjustments and extensions here.

Edition 4 defines some explicit data type conversions that are not included in Edition 3:

- LWORD_TO_BOOL, DWORD_TO_BOOL, WORD_TO_BOOL and BYTE_TO_BOOL

- UINT_TO_WCHAR and USINT_TO_CHAR

- WSTRING_TO_WCHAR

- WCHAR_TO_UINT

Some explicit data type conversions have been changed to implicit data type conversions:

- STRING_TO_WSTRING

- CHAR_TO_WCHAR

Some implicit and explicit data type conversions have been added for the USTRING and UCHAR data types:

- UDINT_TO_UCHAR and DWORD_TO_UCHAR

- USTRING_TO_WSTRING, USTRING_TO_STRING and USTRING_TO_UCHAR

- UCHAR_TO_UDINT, UCHAR_TO_LWORD, UCHAR_TO_DWORD, UCHAR_TO_USTRING, UCHAR_TO_WCHAR and UCHAR_TO_CHAR

- WSTRING_TO_USTRING and STRING_TO_USTRING

- CHAR_TO_UCHAR

Data type conversion between strings and ARRAY OF BYTE

The functions for data type conversion between strings and ARRAY OF BYTE are new additions. These are described in chapter 6.6.2.5.8 Data type conversion between string types and array of bytes:

- STRING_TO_ARRAY_OF_BYTE, WSTRING_TO_ARRAY_OF_BYTE and USTRING_TO_ARRAY_OF_BYTE

- ARRAY_OF_BYTE_TO_STRING, ARRAY_OF_BYTE_TO_WSTRING and ARRAY_OF_BYTE_TO_USTRING

TRUNC was removed

The TRUNC function is defined as a typed and overloaded function. In the typed notation, the source data type and the target data type are specified in the function name, e.g. LREAL_TRUNC_DINT(x), while in the overloaded notation, only the target data type is specified, e.g. TRUNC_DINT(x). The source data type is determined from the transferred parameter.

Edition 3 still defined the notation without a data type, i.e. TRUNC(x). Here the source data type always had to be ANY_REAL and the target data type always ANY_INT. This notation was already labelled as deprecated in Edition 3 and has now been removed from Edition 4.

Properties

Edition 4 (finally) defines properties. Properties are part of a CLASS, FUNCTION_BLOCK or INTERFACE. Setter and getter methods are declared so that the value of a property can be set or read. The declaration is similar to that of a method. However, the keywords PROPERTY_GET and PROPERTY_SET are used instead of the METHOD keyword.

PROPERTY_GET PUBLIC nFoo : INT

;

END_PROPERTY

PROPERTY_SET PUBLIC nFoo : INT

;

END_PROPERTY

If setter and getter methods are defined, the data type must be the same for both methods.

The same access specifiers can be used for properties as for methods, i.e. INTERNAL, PRIVATE, PROTECTED and PUBLIC. The keywords ABSTRACT and FINAL are also possible. If no access specifier is specified, the property is PROTECTED.

If a property is only to be readable, the setter method can be omitted or set to PRIVATE.

A property can be used like an instance variable:

VAR

fbFoo : FB_Foo;

x : INT := 10;

END_VAR

fbFoo.nFoo := x; // Write to the property

x := fbFoo.nFoo; // Read from the property

Program code is stored in the setter and getter methods to manage the value of the property internally.

FUNCTION_BLOCK FB_Foo

VAR PRIVATE

_nFoo : INT; // Backing variable

END_VAR

PROPERTY_GET PUBLIC nFoo : INT

nFoo := _nFoo;

END_PROPERTY

PROPERTY_SET PUBLIC nFoo : INT

_nFoo := nFoo;

END_PROPERTY

END_FUNCTION_BLOCK

The properties are described in chapter 6.6.5.11 Properties.

Assertions

In chapter 6.6.2.5.17 Assertions, Edition 4 defines the ASSERT function, which has the input parameter IN of type BOOL and no return value. ASSERT is used to check the validity of expressions or variables during development.

If IN is TRUE when called, the function has no effect. However, if IN is FALSE, ASSERT should ‘inform’ the developer of this. Each manufacturer (of the development environment) can individually define what the message looks like.

Once development is complete, calling ASSERT no longer has any effect, regardless of the value of the input parameter IN. How this is communicated to the development environment can be determined by the manufacturer.

Synchronization of concurrent execution

Chapter 6.9 Synchronization of concurrent execution has been added in Edition 4 and defines objects and functions to synchronize program code that has been split into several tasks. Synchronization is required, for example, if two parallel programs access a common variable and at least one of them also changes it.

Mutexes and Semaphores are defined in Edition 4 to make it easier to synchronize programs running in parallel.

I will only briefly introduce the functions that Edition 4 defines. A detailed description of these concepts would be too extensive at this point, but could be the subject of a separate post.

Mutex

Mutexes are usually used to model critical sections. A mutex can be locked and unlocked.

Both an object-oriented approach and an approach based on functions are defined. The object-oriented approach is based on the MUTEX function block, which provides methods for locking and unlocking the mutex.

MUTEX also has the properties LOCK_COUNT and OWNER. OWNER is used to store a value that identifies the task.

In the approach based on functions, the corresponding functions are available:

- MUTEX_UNLOCK

- MUTEX_LOCK

- MUTEX_TRYLOCK
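As I could not try the Edition 4 functions themselves, the following sketch shows the same lock / unlock / try-lock pattern with Go's `sync.Mutex` instead. It is an analogy only, not the IEC 61131-3 API. The type `counter` and the functions `add`, `tryAdd` and `runWorkers` are made up for this example. Several goroutines stand in for tasks and change a shared variable inside a critical section.

```go
package main

import (
	"fmt"
	"sync"
)

// counter is shared state protected by a mutex (the critical section).
type counter struct {
	mu sync.Mutex
	n  int
}

// add locks the mutex, changes the shared value, and unlocks again.
func (c *counter) add(delta int) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.n += delta
}

// tryAdd changes the value only if the mutex is free right now.
func (c *counter) tryAdd(delta int) bool {
	if !c.mu.TryLock() {
		return false
	}
	defer c.mu.Unlock()
	c.n += delta
	return true
}

// runWorkers starts several goroutines that each increment the counter.
func runWorkers(workers, perWorker int) int {
	var c counter
	var wg sync.WaitGroup
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for j := 0; j < perWorker; j++ {
				c.add(1)
			}
		}()
	}
	wg.Wait()
	return c.n
}

func main() {
	fmt.Println(runWorkers(8, 1000)) // 8000, no increments are lost
}
```

Without the lock in `add`, concurrent increments could overwrite each other and the result would be unpredictable.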

Semaphore

Here too, both an object-oriented approach and an approach based on functions are defined. The SEMA function block was defined for the object-oriented approach. This has the methods:

- RELEASE

- ACQUIRE

- TRY_ACQUIRE

The following functions have been defined accordingly:

- SEMA_RELEASE

- SEMA_ACQUIRE

- SEMA_TRY_ACQUIRE
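The acquire / try-acquire / release triple can be illustrated in the same way. The sketch below builds a counting semaphore in Go from a buffered channel. Again, this is an analogy under my own assumptions and not the SEMA function block of the standard. The names `semaphore`, `newSemaphore`, `acquire`, `tryAcquire` and `release` are chosen for this example only.

```go
package main

import "fmt"

// semaphore is a counting semaphore backed by a buffered channel.
// The channel capacity is the number of available permits.
type semaphore chan struct{}

func newSemaphore(permits int) semaphore {
	return make(semaphore, permits)
}

// acquire blocks until a permit is available.
func (s semaphore) acquire() {
	s <- struct{}{}
}

// tryAcquire takes a permit only if one is free and reports success.
func (s semaphore) tryAcquire() bool {
	select {
	case s <- struct{}{}:
		return true
	default:
		return false
	}
}

// release returns a previously acquired permit.
func (s semaphore) release() {
	<-s
}

func main() {
	sem := newSemaphore(2)
	sem.acquire()
	fmt.Println(sem.tryAcquire()) // true: the second permit is taken
	fmt.Println(sem.tryAcquire()) // false: no permit left
	sem.release()
	fmt.Println(sem.tryAcquire()) // true: a permit is available again
}
```

Unlike a mutex, a semaphore with more than one permit lets several tasks enter the protected section at the same time.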

Support of BCD numbers discontinued

In future, BCD numbers will no longer be supported. In Edition 4, the following functions are marked as deprecated:

- IS_VALID_BCD

- BCD_TO_out and in_BCD_TO_out

- TO_BCD_out and in_TO_BCD_out

Conclusion

My personal highlight is the support of mutexes and semaphores. In times when CPUs have more and more cores, appropriate tools must also be provided to distribute a program across several tasks.

I would describe the remaining changes as general care and maintenance. It’s good that things are also removed from the standard if they are no longer relevant. This includes, for example, the removal of IL and the omission of octal literals.

Some points have also been defined in more detail, for example the question of whether ARRAY[1..1] and ARRAY[1..0] are permitted and how they behave (chapter 6.4.4.5.1).

Otherwise, I don’t see the differences as being as extensive as those between Edition 2 (published in 2003) and Edition 3 (published in 2013).

However, I also miss some points. These include local enums (implicit enumeration), optional parameters for methods and functions, as well as the option to overload methods.

The previous GreatBlogs post was about optimising the resource usage of the existing software. Now that the project delivers a first benefit, it is time to get down to the nitty-gritty: the actual search topic. That takes several things, one of which is a web crawler that looks for content.

Basic idea

The idea of the web crawler is to find new pages and updates, starting from a given starting point. These go into an index and are subsequently categorised by topic and given a relevance score by another process.

First iterations and learnings

In theory, a web crawler is a simple thing. But as always in software development, theory meets practice: some issues come up unexpectedly, and others are deliberately postponed.

I chose an iterative approach, with the goal of getting a simple crawler working and then continuously optimising it and adding new features.

Webcrawler v0.1.0

Like the existing backend, the web crawler is written in Go. It identifies itself as gbbot. Starting from a start domain, it crawls that domain and persists both the individual pages of the domain and any other domains it finds.

In the next step, the newly found domains are crawled using the same procedure.

With net/http and net/url, Go ships with everything you need.
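As a rough sketch of the v0.1.0 idea, the following Go code uses only net/url to resolve the links found on a page and to separate pages of the same domain from other domains. This is not the actual gbbot code. The function `splitLinks` and the example.org URLs are assumptions for illustration, and the step that fetches the page and extracts the href values from the HTML is left out.

```go
package main

import (
	"fmt"
	"net/url"
	"sort"
)

// splitLinks resolves raw href values against the page URL and separates
// pages on the same host from the hosts of other domains.
func splitLinks(page string, hrefs []string) ([]string, []string, error) {
	base, err := url.Parse(page)
	if err != nil {
		return nil, nil, err
	}
	var pages, domains []string
	seen := map[string]bool{}
	for _, href := range hrefs {
		ref, err := url.Parse(href)
		if err != nil {
			continue // skip links that cannot be parsed
		}
		abs := base.ResolveReference(ref)
		if abs.Scheme != "http" && abs.Scheme != "https" {
			continue // mailto:, tel: and similar are not crawlable
		}
		host := abs.Hostname()
		if host == base.Hostname() {
			pages = append(pages, abs.String())
		} else if !seen[host] {
			seen[host] = true
			domains = append(domains, host)
		}
	}
	sort.Strings(domains)
	return pages, domains, nil
}

func main() {
	pages, domains, err := splitLinks("https://example.org/blog/", []string{
		"post-1", "/about", "https://other.example/x", "mailto:me@example.org",
	})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(pages)
	fmt.Println(domains)
}
```

The pages would be queued for the current domain, while the domains would be persisted for a later run.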

Webcrawler v0.2.0

After just the third run, there was a huge number of domains, most of which naturally no longer had anything to do with blogs. Basically, all social media platforms and big tech companies show up in the list. So manual activation is needed after all.

All discovered domains are still stored, but the domains to be crawled have to be activated manually. That is manual work for now, but without a reliable way to detect that a site is a blog, there is no other option.

The remaining drawback: parsing and following links is not exactly cheap.

Webcrawler v0.3.0

To improve the whole parsing process, the crawler now checks at the start whether a sitemap.xml exists. If so, it is used. Sitemap index files are supported just like standard sitemap files.

In this case, only these pages are evaluated and only newly found domains are recorded; no further links are followed.

Overall, this is considerably faster and more memory-efficient than remembering all crawled pages and checking, while traversing the tree, whether a found link has already been visited.

For working with sitemap files I rely on gopher-parse-sitemap. The library is somewhat older, but its dependencies are manageable and the sitemap standard has not changed since, so that is no problem.
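To illustrate the difference between the two sitemap file types, here is a minimal sketch that uses only the standard library package encoding/xml. It is not how gopher-parse-sitemap works internally and not the GreatBlogs code; the type and function names are assumptions made for this example.

```go
package main

import (
	"encoding/xml"
	"fmt"
	"strings"
)

// sitemapFile covers both document types of the sitemap protocol:
// <urlset> (a list of pages) and <sitemapindex> (a list of further sitemaps).
type sitemapFile struct {
	XMLName  xml.Name
	URLs     []sitemapEntry `xml:"url"`
	Sitemaps []sitemapEntry `xml:"sitemap"`
}

type sitemapEntry struct {
	Loc string `xml:"loc"`
}

// parseSitemap (hypothetical helper) returns the page URLs of a urlset or
// the nested sitemap URLs of a sitemapindex, depending on the root element.
func parseSitemap(data []byte) ([]string, []string, error) {
	var doc sitemapFile
	if err := xml.Unmarshal(data, &doc); err != nil {
		return nil, nil, err
	}
	var pages, nested []string
	switch doc.XMLName.Local {
	case "urlset":
		for _, u := range doc.URLs {
			pages = append(pages, strings.TrimSpace(u.Loc))
		}
	case "sitemapindex":
		for _, s := range doc.Sitemaps {
			nested = append(nested, strings.TrimSpace(s.Loc))
		}
	default:
		return nil, nil, fmt.Errorf("unexpected root element %q", doc.XMLName.Local)
	}
	return pages, nested, nil
}

func main() {
	index := []byte(`<?xml version="1.0" encoding="UTF-8"?>
<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap><loc>https://example.org/sitemap-posts.xml</loc></sitemap>
</sitemapindex>`)
	_, nested, err := parseSitemap(index)
	if err != nil {
		panic(err)
	}
	fmt.Println("nested sitemaps:", nested)
}
```

For a sitemap index, the crawler would fetch each nested sitemap and parse it the same way, and only the pages listed in a urlset would be evaluated.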

Learnings

Defensive programming and all that, you know the drill.

But some of what the crawler finds out there is really borderline. In any case, it has to cope with numerous edge cases:

- Outdated links using HTTP instead of HTTPS

- Client links

- Malformed links

- Dead links

- Domains that no longer exist

And there are more cases. Sometimes I would actually like to write to some domain owners and send them a list of nonsensical links, but that is a completely different story.

Further improvements

Of course there is still a lot to do, because the web crawler is far from optimal. These are the topics up next:

- Respecting robots.txt

- Restricting the language (German only for now)

- Handling of /slashes

After that, the work moves towards topic detection for the posts.

If you are interested, please feel free to drop by GreatBlogs.

The post GreatBlogs – Ein Webcrawler entsteht first appeared on Norbert Eder.

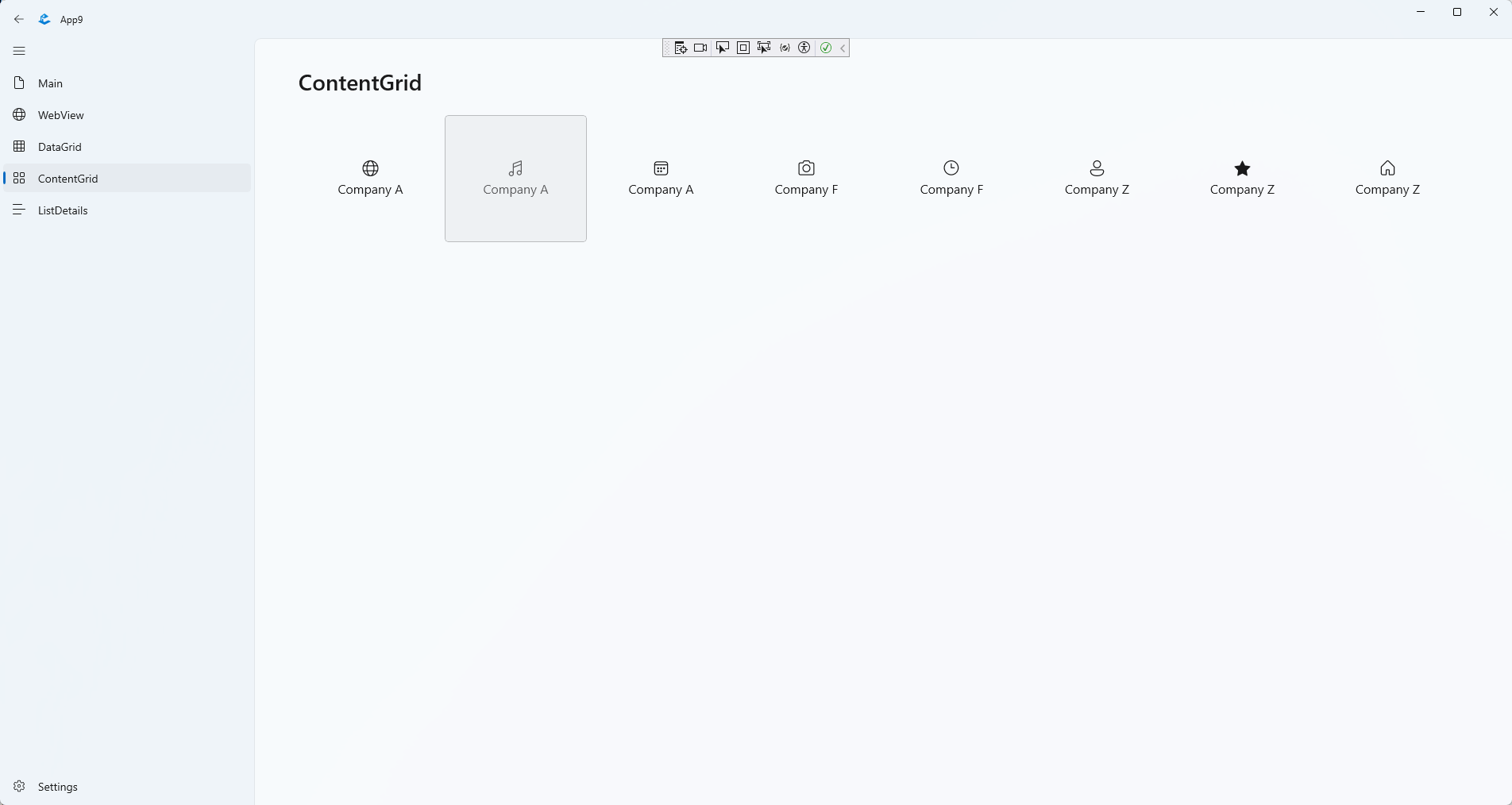

In GreatBlogs – Technik-Umschwung am Frontend I already announced changes to the technology selection. Part of that has since been implemented. Let's look at the changes in detail.

Feed generation

The post feed was initially implemented with Vue.js. That is nice, but it essentially results in a database query on every page view. Since it is not important to list new posts down to the second, the decision to generate the feed reader and serve it statically was an easy one.

Go offers a templating engine that is perfect for this purpose. So I designed templates and styles; a Go program fetches the relevant data, runs it through the templating engine, and the result is done.

Since a cron job updates the registered feeds every 30 minutes anyway, the feed page is regenerated right afterwards as part of that job.

Blogger listing

The new blogger listing follows the same approach. It shows all registered blogs. Since this list changes even less often, updating it once a day is enough. This is also done by a cron job.

RSS feeds

As the screenshot above shows, RSS feeds are now offered as well. There is a handy Go library for this: Gorilla Feeds.

With this library, Atom and RSS 2.0 feeds can be generated very easily, which makes offering feeds child's play.

Tools

Besides the technical aspects, there are other topics to take care of: usability, accessibility, performance and, of course, IT topics such as setting up and configuring cron jobs or the web server. Here are a few links to helpful websites:

The post GreatBlogs – Ressourcen-Optimierung, RSS und Co. first appeared on Norbert Eder.

Go ships a template engine right in its standard library. It can generate arbitrary text files as well as HTML files from templates. That is a pretty cool and helpful thing.

Specifically, we are talking about the standard library packages html/template and text/template. For my work on GreatBlogs I used html/template.

Templating is fairly easy. Here is an example:

<div class="grid bloggers">

{{ range .Bloggers }}

<div class="card" data-category="{{ .Category }}">

<a href="{{ .URL }}" class="card-title">{{ .Title}}</a><br/>

<div class="card-info">

<span>{{ translate .Category }}</span>

</div>

</div>

{{ end }}

</div>

And here is the data structure that is passed to the templating engine:

var feedData = FeedData{

Articles: articles,

Bloggers: bloggers,

BloggersCount: len(bloggers),

WaitingBlogs: count,

GenerationDate: time.Now(),

}

In the example above, articles, bloggers, etc. are read from a database and used to fill the data structure. To make the templating work as a whole, you can use this code:

templateFilename := path.Base("index.tmpl")

tmpl, err := template.New(templateFilename).ParseFiles(templateFilename)

checkError(err)

Make sure that the name passed to New matches the base name of the file passed to ParseFiles. Otherwise the template created by New stays empty and Execute returns an error.

After that, the generation step is started:

var f *os.File

f, err = os.Create("index.html")

checkError(err)

err = tmpl.Execute(f, feedData)

checkError(err)

err = f.Close()

checkError(err)

For completeness, the checkError function:

func checkError(err error) {

if err != nil {

panic(err)

}

}

That is basically it.
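The template shown earlier calls a function named translate, which has to be registered before the template is parsed. Here is a self-contained sketch of how that can look with html/template. The shortened template, the `Blogger` fields, and the body of `translate` are assumptions for illustration; the real GreatBlogs code may differ.

```go
package main

import (
	"bytes"
	"fmt"
	"html/template"
)

// Blogger and FeedData are reduced to the fields this sketch needs.
type Blogger struct {
	Title    string
	URL      string
	Category string
}

type FeedData struct {
	Bloggers []Blogger
}

// page is a shortened version of the blogger listing template.
const page = `{{ range .Bloggers }}<a href="{{ .URL }}">{{ .Title }}</a> ({{ translate .Category }}){{ end }}`

// translate is a stand-in: it maps a category key to a display name.
func translate(category string) string {
	names := map[string]string{"tech": "Technology"}
	if name, ok := names[category]; ok {
		return name
	}
	return category
}

// render parses the template and executes it into a string.
func render(data FeedData) (string, error) {
	// Funcs must be called before Parse so that the parser knows "translate".
	tmpl, err := template.New("index.tmpl").
		Funcs(template.FuncMap{"translate": translate}).
		Parse(page)
	if err != nil {
		return "", err
	}
	var buf bytes.Buffer
	if err := tmpl.Execute(&buf, data); err != nil {
		return "", err
	}
	return buf.String(), nil
}

func main() {
	out, err := render(FeedData{Bloggers: []Blogger{
		{Title: "Tom & Jerry", URL: "https://example.org/", Category: "tech"},
	}})
	if err != nil {
		panic(err)
	}
	fmt.Println(out)
}
```

Because html/template escapes values according to their context, the ampersand in the title ends up as `&amp;` in the generated HTML. That contextual escaping is the main reason to prefer html/template over text/template for HTML output.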

More detailed information and additional functionality can be found in the documentation of the standard library.

The post HTML-Dateien mit Go generieren first appeared on Norbert Eder.

Things are happening at GreatBlogs. In the post GreatBlogs – Projekt und Technik I wrote that I am using a largely new dev stack. As these things go, that can change very quickly, and it will. The reason and a few other topics are covered in this post.

Static pages need fewer resources

The idea of using a new JavaScript UI framework was a nice one. After thinking it over, some time getting familiar with it, and a few conversations, I have now reached the point where I realize I don't need it.

Rather, I want to do without as much JavaScript as possible. Most pages should also work without JavaScript. What are the reasons for this?

- Lower resource consumption

- Works even with JavaScript disabled

- Better caching

- Less complexity

The article feed is one example. Until new articles are available, it always shows the same information. That information does not have to be fetched from the database again and again. With a templating engine, the feed page can be generated once whenever there are new articles. No further database access is needed. This becomes noticeable as the number of visitors grows.

Since an article query runs in the background at regular intervals, a suitable trigger point already exists.

The feed has now been rebuilt accordingly.

Go already offers a template engine in its standard library that makes it quick and easy to generate HTML pages (as well as plain text files).

The result now runs at read.greatblogs.org. The category filter still relies on JavaScript, but that will be replaced soon as well.

The next technical steps?

search.greatblogs.org is still running the Vue.js app for now, but changes are coming here soon, too. And then things slowly but surely move towards a search engine. Before that, there will be a few more features for the feed, but then it gets down to the nitty-gritty.

Learnings so far?

From time to time it is good to throw yourself into a completely new tech stack. You learn an incredible amount. Here is a short excerpt:

- Golang: web API, templating, database access

- Linux administration: services, automation, metrics, logging

- PostgreSQL: administration, backup

Even if some things were already familiar: it is applying them, the challenge, that makes you grow. Automation is another big keyword. It takes an enormous amount of (dull) work off your hands and also helps to avoid mistakes.

I am sure a lot more will be added …

The post GreatBlogs – Technik-Umschwung am Frontend first appeared on Norbert Eder.

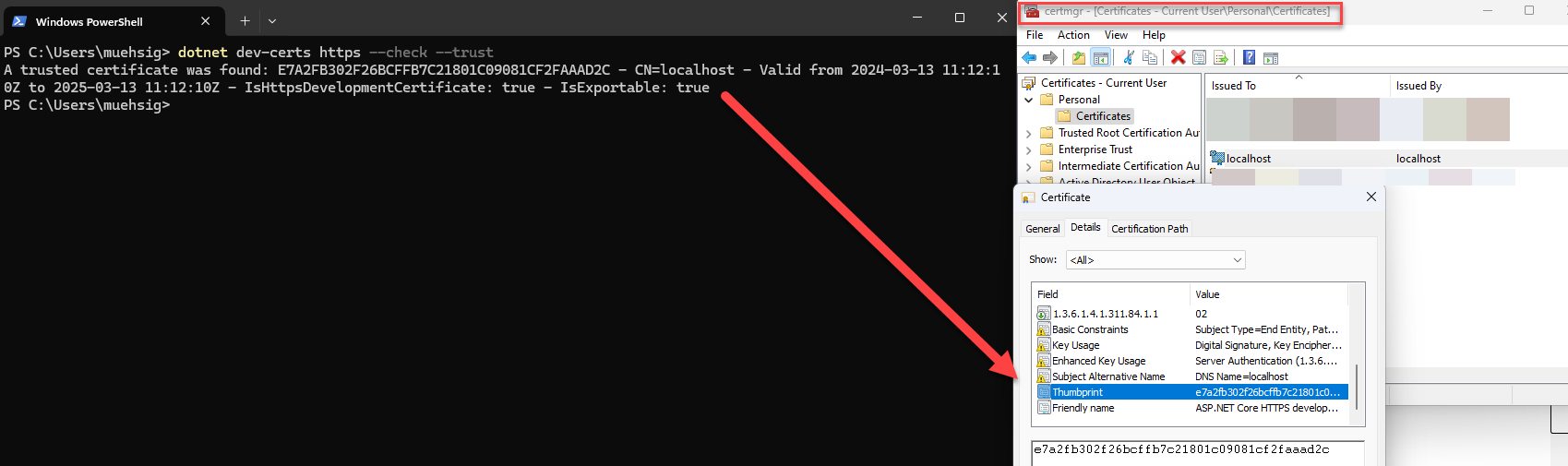

I took over two more roles in the company for a while. Besides being a software engineer, I'm also responsible for sharing knowledge, raising awareness, and supporting projects regarding quality assurance and application security. The latter is the topic for which I'm writing these lines.

Package Vulnerabilities

In Visual Studio, NuGet checks the referenced packages for vulnerabilities, which is a great new feature. We now see a notification in VS if a referenced or transitive package has vulnerabilities. We can also run a dotnet CLI command (dotnet list package --vulnerable) to see if a package has vulnerabilities, which is also great. The CLI command can be used in build pipelines to check for vulnerabilities automatically at build time. Awesome.

For a while now, our company has been using a separate tool that scans our repositories during code changes on the main branches and PRs. This tool does not use NuGet but reads all files that have NuGet references, such as project files and other package reference files. It checks the package names and version against CVE Databases for published vulnerabilities and reports them directly to us via Slack notifications. We can also create Jira tickets directly within the tool and assign them to the right project and the right person to solve the problem. Also, this tool is scanning transitive packages, which is cool in general.

Vulnerable Transitive Packages

In the previous section, I mentioned transitive packages twice. These are packages referenced by the packages you reference directly in your projects. They are second- or third-level references.

What's the problem with those?

Counter question:

How would you solve vulnerabilities in that kind of package?

Exactly! That's the problem!

Vulnerabilities in directly referenced packages can be updated really simply. Just update to a patched package version, and the problem is solved, right?

In most cases, CVE databases list vulnerabilities that have already been patched. Otherwise, you would make a vulnerability publicly known that can't be patched, which is dangerous for the user of a vulnerable package. The tool we use and the NuGet audit feature check against CVE databases.

Vulnerabilities that are not listed in those databases cannot be found using those tools. These are called Zero-Day vulnerabilities.

Since transitive packages are not directly referenced, you can't easily increase the version number to a patched version.

We need a solution for it.

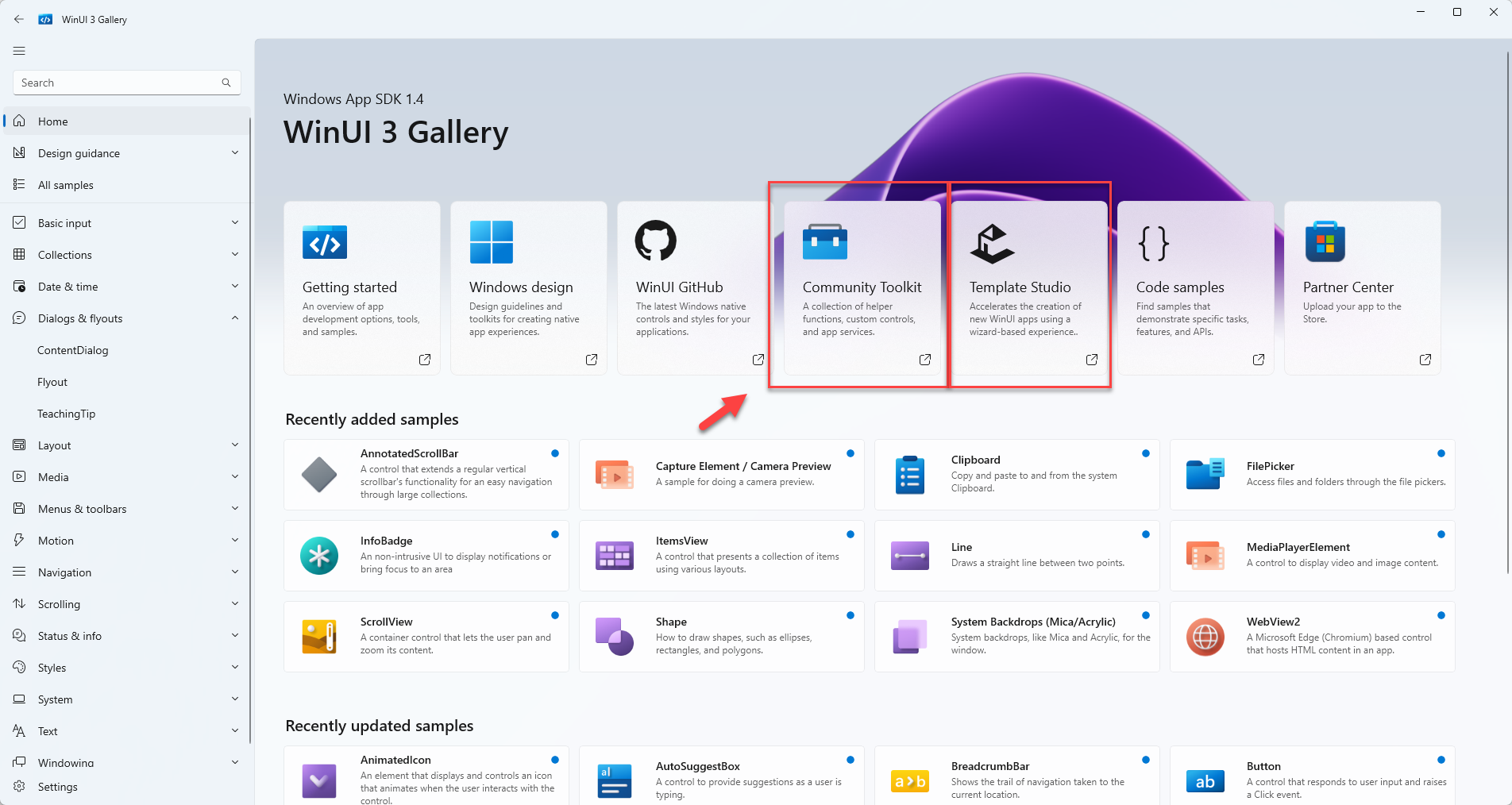

Central Package Management (CPM)

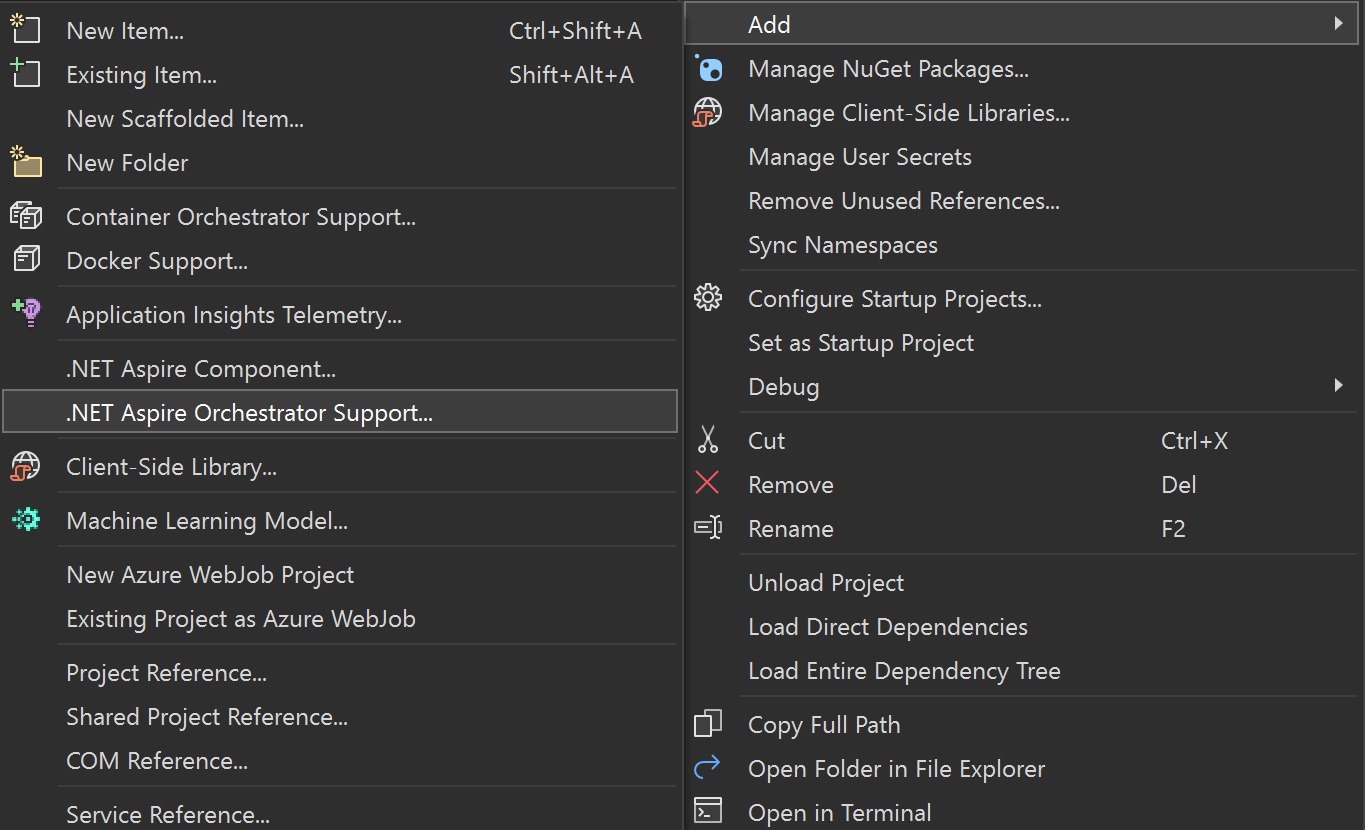

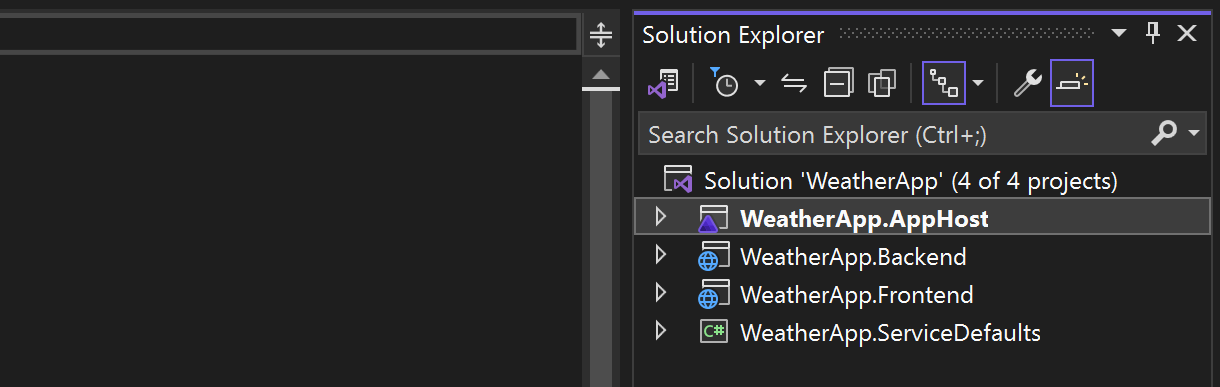

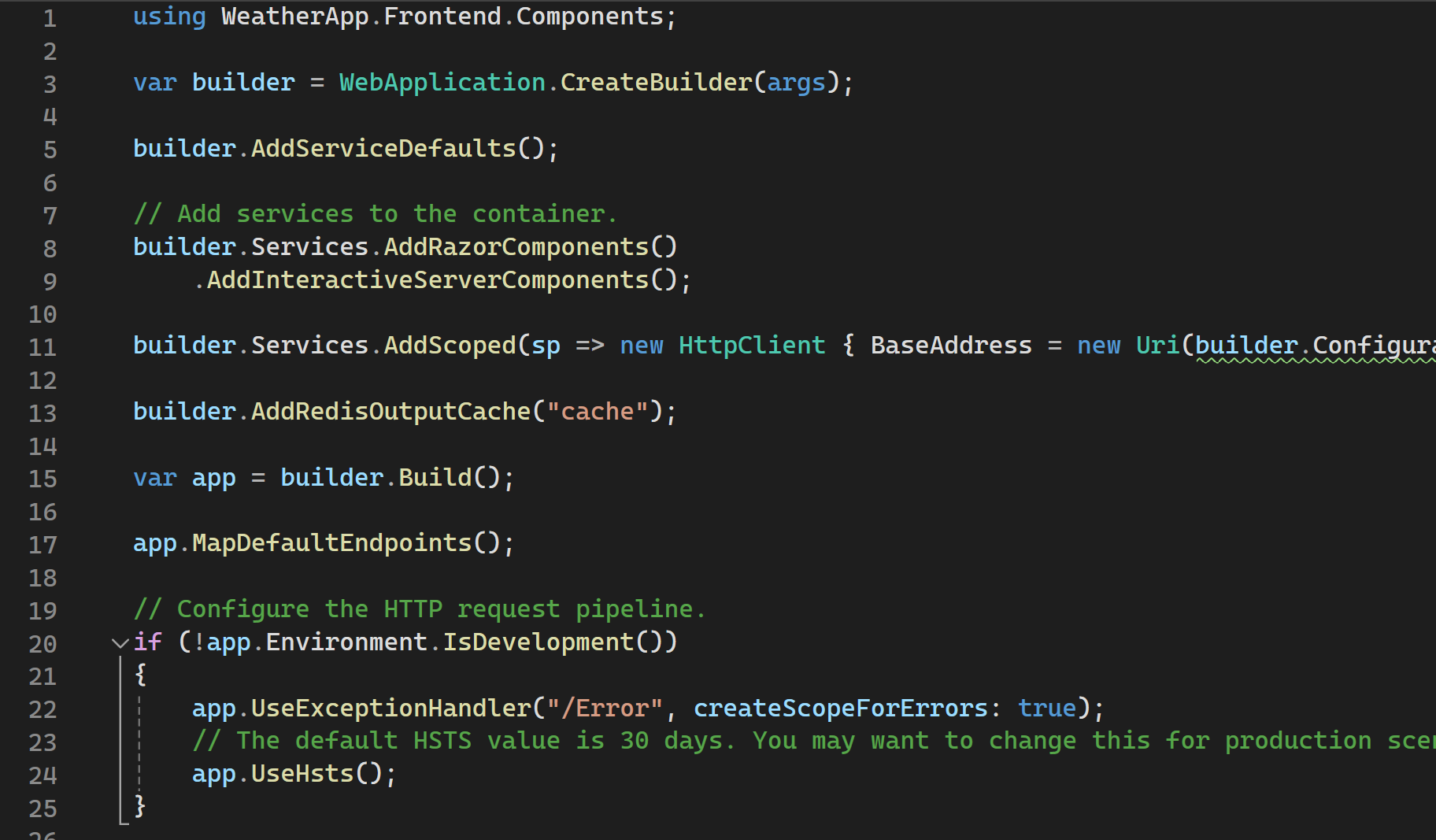

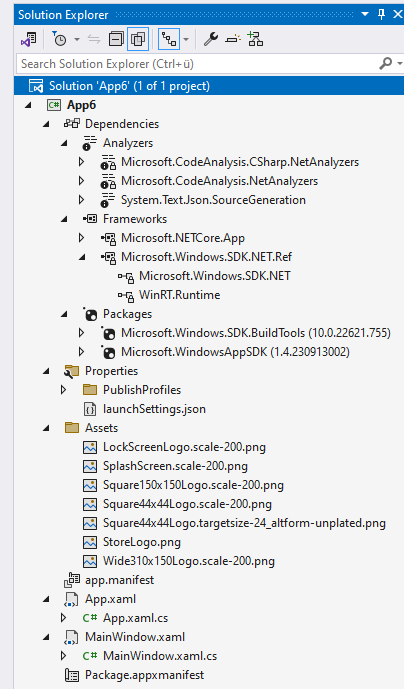

A quick search (= googling) pointed me to Central Package Management for NuGet. This is a somewhat hidden feature in the .NET ecosystem. It is supported by SDK-style projects in VS, VS Code, and the dotnet CLI.

Imagine you can manage your packages and package versions in a central place for all the projects in your solution. This solves several problems:

- All projects use the same package version.

- You can manage the package version in one place.

Projects then reference the packages without a version number. VS supports it, NuGet supports it, and the dotnet CLI supports it. When I call it a somewhat hidden feature, I mean this: you can't switch to CPM from within VS, and you can't create the file for managing your packages centrally from within VS.

CPM is basically yet another XML file, called Directory.Packages.props, that needs to be located in the same folder as your solution file.

To create such a file, you can create a new XML file, rename it to Directory.Packages.props, google for the docs, and add the base structure of the XML to the file. Or you can take the easier way and let the dotnet CLI create the file:

dotnet new packagesprops

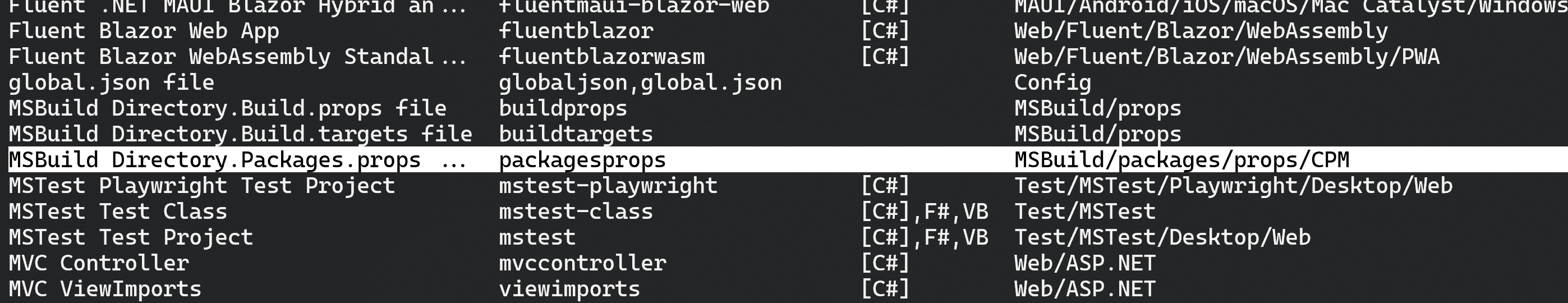

Type dotnet new list to find the file in the list of templates:

This will create a file like this:

<Project>

<PropertyGroup>

<!-- Enable central package management, https://learn.microsoft.com/en-us/nuget/consume-packages/Central-Package-Management -->

<ManagePackageVersionsCentrally>true</ManagePackageVersionsCentrally>

</PropertyGroup>

<ItemGroup>

</ItemGroup>

</Project>

Even though the NuGet explorer supports this file, you will probably need to touch it from time to time. Therefore, I'd propose adding it to a solution folder in VS to have quick access to it while developing. I usually add a solution folder called _default or _shared to every solution. It contains files like this one, the .gitignore, or any other file that is not part of a project and needs to be edited from time to time.

Now the work starts, and you should add all the packages referenced in your projects within this file without having duplications. Duplications lead to NuGet errors when adding references or at build time.

<Project>

<PropertyGroup>

<ManagePackageVersionsCentrally>true</ManagePackageVersionsCentrally>

</PropertyGroup>

<ItemGroup>

<PackageVersion Include="Aspire.Hosting.AppHost" Version="9.1.0" />

<PackageVersion Include="CodeHollow.FeedReader" Version="1.2.6" />

<PackageVersion Include="coverlet.collector" Version="6.0.4" />

<PackageVersion Include="Duende.IdentityServer" Version="7.2.0" />

<PackageVersion Include="EfCore.SchemaCompare" Version="9.0.0" />

<PackageVersion Include="FluentAssertions" Version="8.2.0" />

<PackageVersion Include="Microsoft.AspNetCore.Authentication.Google" Version="9.0.3" />

<PackageVersion Include="Microsoft.AspNetCore.Authentication.OpenIdConnect" Version="9.0.3" />

<PackageVersion Include="Microsoft.EntityFrameworkCore.Design" Version="9.0.3" />

<PackageVersion Include="Microsoft.EntityFrameworkCore.SqlServer" Version="9.0.3" />

You can do this manually on small projects. Unfortunately, the dotnet CLI or VS does not support automatically converting all NuGet references in a solution to CPM.
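When the file is put together by hand, duplicate entries slip in easily. The following is a small helper sketch, written in Go, that lists package IDs that occur more than once in a Directory.Packages.props file. It is not part of NuGet or the dotnet CLI; the function name `duplicatePackages` is made up, and the sketch compares IDs case-insensitively because NuGet package IDs are not case-sensitive.

```go
package main

import (
	"encoding/xml"
	"fmt"
	"sort"
	"strings"
)

// packagesProps models only the parts of Directory.Packages.props
// that matter here: the PackageVersion items inside ItemGroup elements.
type packagesProps struct {
	ItemGroups []struct {
		Packages []struct {
			ID      string `xml:"Include,attr"`
			Version string `xml:"Version,attr"`
		} `xml:"PackageVersion"`
	} `xml:"ItemGroup"`
}

// duplicatePackages (hypothetical helper) returns the lower-cased IDs of
// all packages that are listed more than once, sorted alphabetically.
func duplicatePackages(data []byte) ([]string, error) {
	var props packagesProps
	if err := xml.Unmarshal(data, &props); err != nil {
		return nil, err
	}
	counts := map[string]int{}
	for _, group := range props.ItemGroups {
		for _, pkg := range group.Packages {
			counts[strings.ToLower(pkg.ID)]++
		}
	}
	var duplicates []string
	for id, n := range counts {
		if n > 1 {
			duplicates = append(duplicates, id)
		}
	}
	sort.Strings(duplicates)
	return duplicates, nil
}

func main() {
	props := []byte(`<Project>
  <PropertyGroup>
    <ManagePackageVersionsCentrally>true</ManagePackageVersionsCentrally>
  </PropertyGroup>
  <ItemGroup>
    <PackageVersion Include="FluentAssertions" Version="8.2.0" />
    <PackageVersion Include="coverlet.collector" Version="6.0.4" />
    <PackageVersion Include="fluentassertions" Version="8.1.0" />
  </ItemGroup>
</Project>`)
	duplicates, err := duplicatePackages(props)
	if err != nil {
		panic(err)
	}
	fmt.Println("duplicates:", duplicates)
}
```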

As an alternative, I propose to use a dotnet tool called centralisedpackageconverter (more information) that you can install with a single command:

dotnet tool install CentralisedPackageConverter --global

After it's installed, run it with the following command and it does its job:

central-pkg-converter .

What about vulnerabilities in transitive packages?

Transitive Pinning

If you run the previous command with the option -t or --transitive-pinning, it adds an XML tag to the PropertyGroup that I was looking for:

<Project>

<PropertyGroup>

<ManagePackageVersionsCentrally>true</ManagePackageVersionsCentrally>

<CentralPackageTransitivePinningEnabled>true</CentralPackageTransitivePinningEnabled>

</PropertyGroup>

<ItemGroup>

<PackageVersion Include="Aspire.Hosting.AppHost" Version="9.1.0" />

This setting enables transitive pinning with CPM. This allows you to add entries for transitive packages to pin them to a specific version.

For example: You are using the latest version, 4.0.0, of a package that references a vulnerable transitive package in version 3.3.13, which is already patched in version 3.3.14. You cannot update the direct reference to solve the problem because you are already using the latest version of the directly referenced package. Instead, you can add an entry for the transitive package and set it to version 3.3.14. This way, you are pinning that transitive package to a later, patched version. This package doesn't need to be referenced in any project. When NuGet resolves the transitive references, it finds the entry and loads the patched package.

This will solve the problem with vulnerable transitive packages.

Again, VS and NuGet support this feature in general. You can still use VS and the NuGet package explorer to manage and update your packages. Once you have migrated to CPM, referencing a new package in a project adds the reference to the project file without a version number.

Conclusion

CPM is great for managing your package versions in a central place. Sure, you can do it with the NuGet package explorer on the solution level as well, but it actually sets the package versions on each project file, which will work until a team member updates a package on the project level instead of the solution level. CPM is always on the solution level.

CPM also solves the security problem of vulnerable transitive package references by using transitive pinning, which allows you to update a transitive package reference to a patched version.

Lastly

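One last thing to mention: Managing the package versions centrally really means for all projects in your solution. Plain versioned NuGet references can't simply be mixed into a CPM solution. If a project really needs a different version of a package, NuGet documents two escape hatches: the VersionOverride attribute on a PackageReference, or opting a single project out of CPM by setting ManagePackageVersionsCentrally to false in that project.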

With GreatBlogs.org I have started a small but fine private project. The goal is to make private blogs searchable: simply finding the many great posts out there, independently of the big data-hungry platforms, without ads and without collecting data in order to sell it. Here I will accompany the project from a technical perspective.

Announcements (such as the initial announcement of BlogSearch) take place on C0D1 Online. This blog is purely about the technical aspects.

The tech stack

Every project requires choosing a tech stack. Often you reach for what you know well in order to make quick progress. For me, however, learning something new is also a priority, so I want to use a stack I have not used in this form before.

Backend

Without many words, a quick overview:

- Linux

- PostgreSQL

- Golang

- nginx

Since my switch to Linux, everything obviously has to run on this operating system. Even though I had already been using partly platform-independent development tools before, there are still a few gaps here and there. But the challenge lies not in the programming language or the database, but in administrative topics on the Linux side.

Every few years I set up cron jobs or had to configure a service. But that was simply too rare. In practice, I have to look everything up and learn it again. That takes time, of course, but you learn quickly, and the joy when it actually works is still a nice thing.

For the web API I use the Gin Web Framework. It offers pretty much everything you need, supports middleware, and is therefore easy and flexible to configure.

Even though I have already done a few small things with Go, a larger real-world application is still pending. That means there is a lot to learn here as well, especially when it comes to various maintenance and diagnostic tasks.

Frontend

On the client side I chose Vue.js with Vite. There is no special reason for this. It seems quite mature to me and offers plenty of support. For this project I did not want to reach for Angular again, but get to know a different option.

Whether the decision was a good one remains to be seen.

What's next?

The first round was about setting up an initial infrastructure and delivering a start. The start means finding participating blogs in order to provide a good data basis for the first features.

Simpler features will follow soon and will bring the first benefit for all website visitors.

On the infrastructure side, some tasks need to be automated, above all release creation and the subsequent deployment. Docker or something similar will probably be used here.

After that, it is on to the core topics of a search, and then one or another technology will probably be added.

The post GreatBlogs – Projekt und Technik first appeared on Norbert Eder.

This is more of a “Today-I-Learned” post and not a “full-blown How-To article.” If something is completely wrong, please let me know - thanks!

Fuslogvw.exe – Wait, What? Ohhh!

This might sound like a blast from the .NET past – but this little tool actually saved our day during a real customer issue.

Here’s the situation: We had a good old .NET Framework app (yes, still alive and kicking!) that worked perfectly everywhere – except on a few mysterious machines. Right on startup: Crash. No clear error, just some DLL failing to resolve its dependencies.

But! The error message did mention something called Fuslogvw.exe (Docs) – a tool I had never actually used before. So let’s take a look.

What does Fuslogvw.exe do?

In short: it logs how assemblies are loaded, where they were found, and – more importantly – where they weren’t.

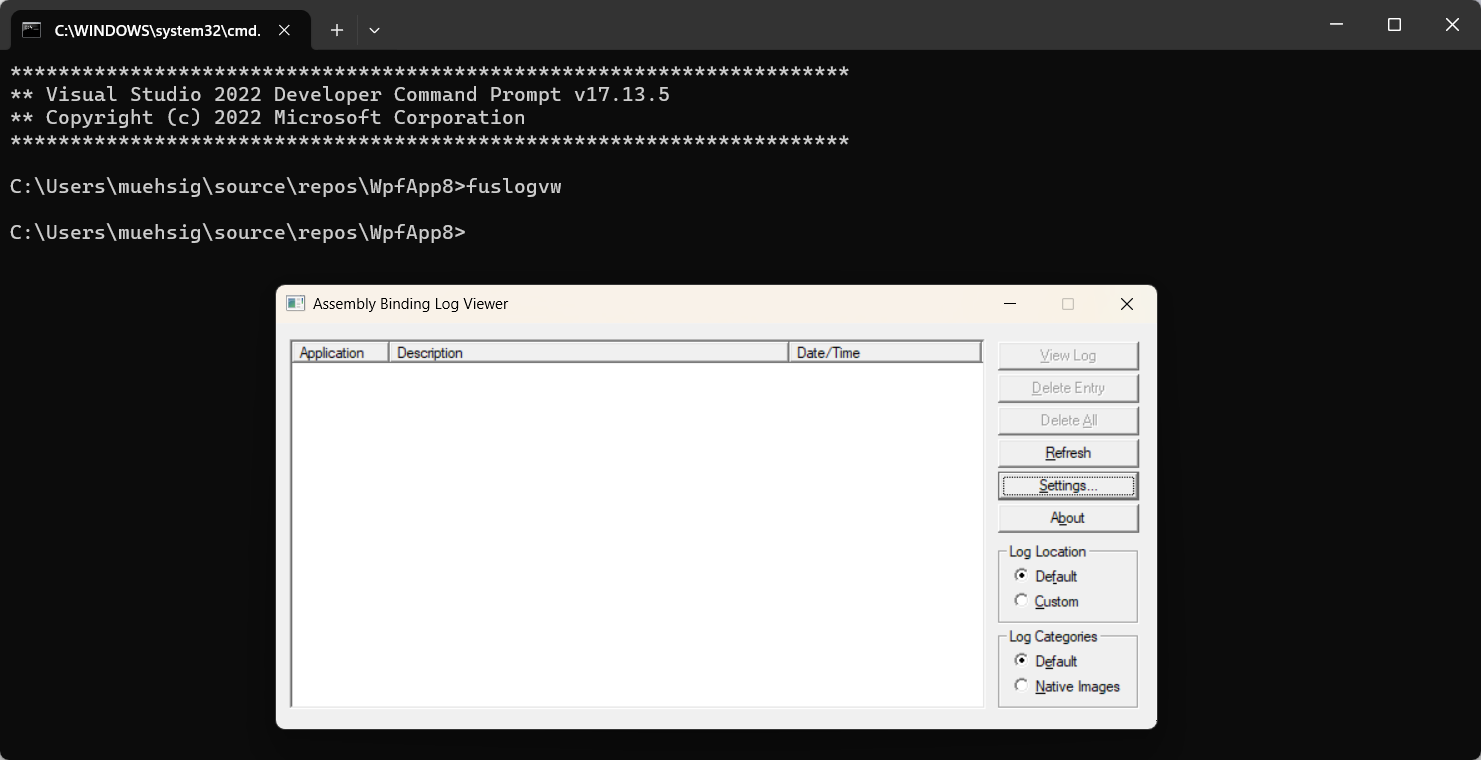

If you have Visual Studio installed, you can start it via the developer prompt and type: fuslogvw.

The tool itself looks like it hasn’t changed since the Windows XP days, but hey – it gets the job done:

At the end, you get handy little reports like this one:

*** Assembly Binder Log Entry (3/5/2007 @ 12:54:20 PM) ***

The operation failed.

Bind result: hr = 0x80070002. The system cannot find the file specified.

Assembly manager loaded from: C:\WINNT\Microsoft.NET\Framework\v2.0.50727\fusion.dll

Running under executable C:\Program Files\Microsoft.NET\FrameworkSDK\Samples\Tutorials\resourcesandlocalization\graphic\cs\graphicfailtest.exe

--- A detailed error log follows.

=== Pre-bind state information ===

LOG: DisplayName = graphicfailtest.resources, Version=0.0.0.0, Culture=en-US, PublicKeyToken=null

(Fully-specified)

LOG: Appbase = C:\Program Files\Microsoft.NET\FrameworkSDK\Samples\Tutorials\resourcesandlocalization\graphic\cs\

LOG: Initial PrivatePath = NULL

LOG: Dynamic Base = NULL

LOG: Cache Base = NULL

LOG: AppName = NULL

Calling assembly : graphicfailtest, Version=0.0.0.0, Culture=neutral, PublicKeyToken=null.

===

LOG: Processing DEVPATH.

LOG: DEVPATH is not set. Falling through to regular bind.

LOG: Policy not being applied to reference at this time (private, custom, partial, or location-based assembly bind).

LOG: Post-policy reference: graphicfailtest.resources, Version=0.0.0.0, Culture=en-US, PublicKeyToken=null

LOG: Attempting download of new URL file:///C:/Program Files/Microsoft.NET/FrameworkSDK/Samples/Tutorials/resourcesandlocalization/graphic/cs/graphicfailtest.resources.DLL.

LOG: Attempting download of new URL file:///C:/Program Files/Microsoft.NET/FrameworkSDK/Samples/Tutorials/resourcesandlocalization/graphic/cs/graphicfailtest.resources/graphicfailtest.resources.DLL.

LOG: Attempting download of new URL file:///C:/Program Files/Microsoft.NET/FrameworkSDK/Samples/Tutorials/resourcesandlocalization/graphic/cs/graphicfailtest.resources.EXE.

LOG: Attempting download of new URL file:///C:/Program Files/Microsoft.NET/FrameworkSDK/Samples/Tutorials/resourcesandlocalization/graphic/cs/graphicfailtest.resources/graphicfailtest.resources.EXE.

LOG: All probing URLs attempted and failed.

And in our case, that was exactly what we needed: One of the machines had an old, incorrect DLL sitting in the Global Assembly Cache (GAC) – something that shouldn’t have been there at all. It got loaded, messed everything up, and was a pain to track down… until Fuslogvw pointed us right to it.
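If you collect several of these reports, skimming them by hand gets tedious. Here is a small sketch in Go that pulls the probed locations out of a binder log like the one above. It assumes the log text follows the format shown in the sample (lines starting with "LOG: Attempting download of new URL") and is in no way part of Fuslogvw itself; the function name `probedLocations` is made up.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// probePrefix marks the log lines that name a probed location.
const probePrefix = "LOG: Attempting download of new URL "

// probedLocations (hypothetical helper) returns every location the
// assembly binder tried, in the order in which it appears in the log.
func probedLocations(log string) []string {
	var locations []string
	scanner := bufio.NewScanner(strings.NewReader(log))
	for scanner.Scan() {
		line := strings.TrimSpace(scanner.Text())
		if !strings.HasPrefix(line, probePrefix) {
			continue
		}
		location := strings.TrimPrefix(line, probePrefix)
		// Each log line ends with a period that is not part of the URL.
		locations = append(locations, strings.TrimSuffix(location, "."))
	}
	return locations
}

func main() {
	log := `LOG: DEVPATH is not set. Falling through to regular bind.
LOG: Attempting download of new URL file:///C:/app/graphicfailtest.resources.DLL.
LOG: Attempting download of new URL file:///C:/app/graphicfailtest.resources.EXE.
LOG: All probing URLs attempted and failed.`
	for _, location := range probedLocations(log) {
		fmt.Println(location)
	}
}
```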

What about .NET Core / modern .NET?

Fuslogvw won’t help you there – things work differently in the new world. But there are other tools like dotnet-trace that can help with similar issues.

Hope this helps!

This is more of a “Today-I-Learned” post and not a “full-blown How-To article.” If something is completely wrong, please let me know - thanks!

Last week I had to upgrade an old SharePoint Framework (SPFX) project, and surprisingly, the process was smoother than expected.

For those unfamiliar, SPFX is a framework for building extensions for SharePoint Online. Here is an example of an SPFX extension: Microsoft Docs.

The upgrade approach I took was a combination of:

- Creating a completely new SPFX project to see the latest project structure.

- Using the M365 CLI to generate a step-by-step upgrade guide.

Overall, it turned out to be a pretty smooth experience. The CLI was quite new to me, so I wanted to document it here.

Hope this helps!

ASP.NET Core Policies

ASP.NET Core policies provide a structured and reusable way to enforce authorization rules across your application.

The built-in features are very flexible, but we had trouble with one scenario. Depending on how you write your “Requirements”, this might even be possible with the built-in features alone.

Our approach was to use the authorization service to check certain policies manually, which works quite well!

The Challenge: Combining Policies with OR Logic

In one of our API use cases, we needed to allow access either for certain clients (e.g., specific administrative applications) or for certain users in the database. The two approaches differ:

- Client Authorization: This is relatively straightforward and can be handled using the built-in RequireClaim approach.

- User Authorization: This required checking database permissions, meaning a custom authorization requirement was necessary.

Since both authorization paths are valid, they need to be evaluated using OR logic: if either condition is met, access should be granted.

Solution: Using the Authorization Service for Manual Policy Checks

Instead of relying solely on [Authorize] attributes, we can leverage the IAuthorizationService to manually check policies in our code.

Step 1: Define the Authorization Policies

In Program.cs, we define multiple policies:

builder.Services.AddAuthorization(options =>

{

options.AddPolicy(KnownApiPolicies.AdminApiPolicyForServiceAccount, policy =>

{

policy.RequireClaim("scope", "admin-client");

});

options.AddPolicy(KnownApiPolicies.AdminApiPolicyForLoggedInUserAdministrator, policy =>

{

policy.Requirements.Add(new DbRoleRequirement(Custom.UserAdminInDatabase));

});

});

Step 2: Manually Validate User Authorization

Using IAuthorizationService, we can manually check if the user meets either of the defined policies.

private async Task<AuthorizationResultType> ValidateUserAuthorization()

{

var user = User;

var serviceAccountAuth = await _authorizationService.AuthorizeAsync(user, KnownApiPolicies.AdminApiPolicyForServiceAccount);

if (serviceAccountAuth.Succeeded)

{

return AuthorizationResultType.ServiceAccount;

}

var userAuth = await _authorizationService.AuthorizeAsync(user, KnownApiPolicies.AdminApiPolicyForLoggedInUserAdministrator);

if (userAuth.Succeeded)

{

return AuthorizationResultType.LoggedInUser;

}

return AuthorizationResultType.None;

}

Step 3: Apply the Authorization Logic in the Controller

[HttpGet]

public async Task<ActionResult<...>> GetAsync()

{

var authResult = await ValidateUserAuthorization();

if (authResult == AuthorizationResultType.None)

{

return Forbid(); // Return 403 if unauthorized

}

using var contextScope = authResult switch

{

AuthorizationResultType.ServiceAccount => // ... do something with the result,

AuthorizationResultType.LoggedInUser => // ...,

_ => throw new UnauthorizedAccessException()

};

return Ok(_userService.GetUsers(...));

}

Recap

We use the IAuthorizationService.AuthorizeAsync method to check multiple policies, and depending on which one succeeds, we can handle the request according to our needs.

This approach retains the same overall structure as the “default” policy-based authorization in ASP.NET Core but provides more flexibility by allowing policies to be evaluated dynamically via the service.

Keep in mind (as mentioned at the beginning): This is just one way of handling authorization. As far as we know, it works well without drawbacks while offering the flexibility we need.

General

An Azure Resource Group is more or less one of the first things you need to create under your Azure subscription because most services need to be placed in an Azure Resource Group.

A resource group has a name and a region, and it feels just like a “folder,” but it’s (sadly) more complicated, and I want to showcase this with App Service Plans.

What is an App Service Plan?

If you run a Website/Web Service/Web API on Azure, one option would be Web Apps-Service.

If you are a traditional IIS developer, the “Web Apps-Service” is somewhat like a “Web Site” in IIS.

When you create a brand-new “Web Apps-Service,” you will need to create an “App Service Plan” as well.

The “App Service Plan” is the system that hosts your “Web App-Service.” The “App Service Plan” is also what actually costs you money, and you can host multiple “Web App-Services” under one “App Service Plan.”

All services need to be created in a resource group.

Recap

An “App Service Plan” can host multiple “Web App-Services.” The price is related to the instance count and the actual plan.

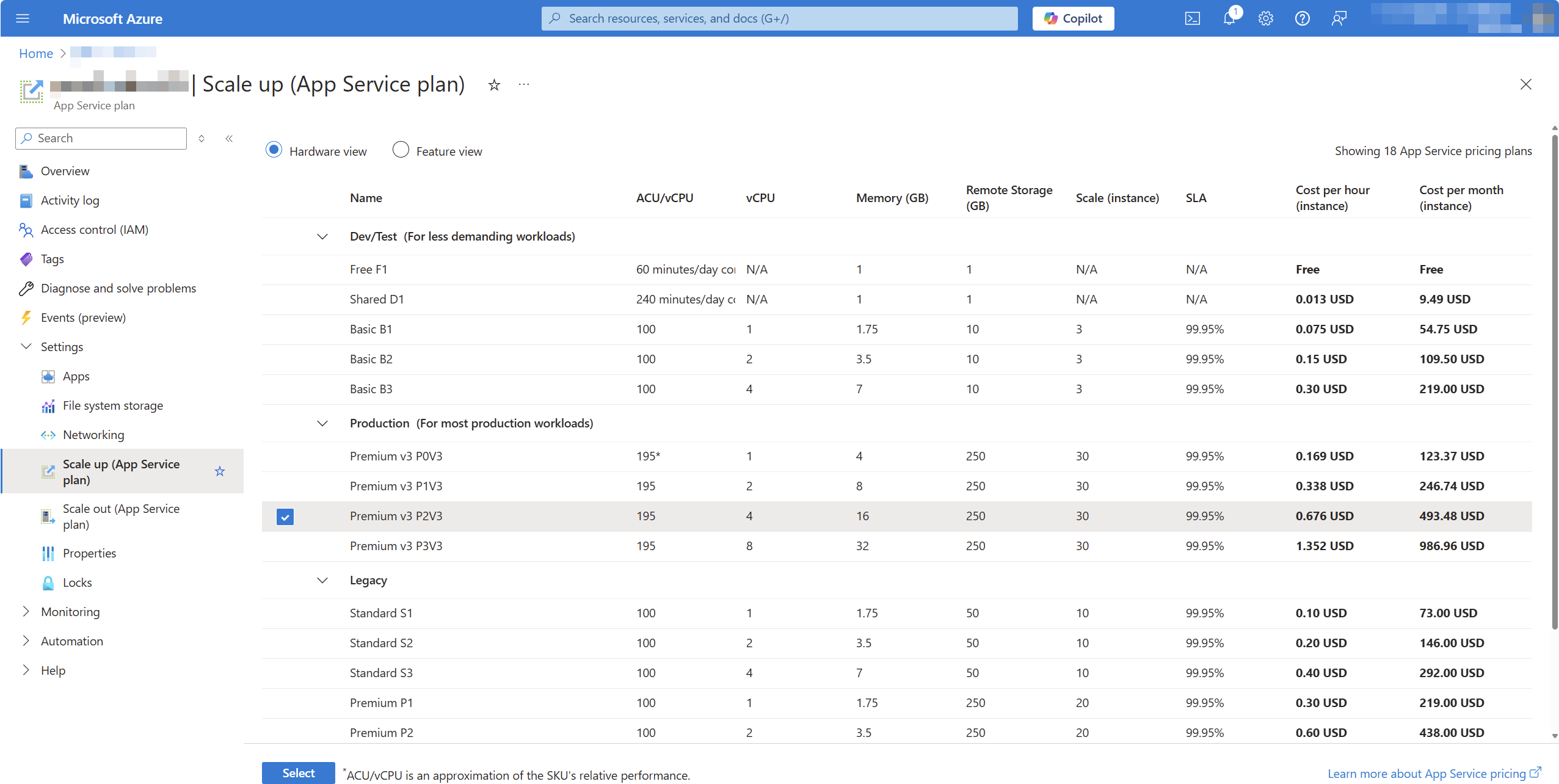

Here is a screenshot from one of our app plans:

So far, so good, right?

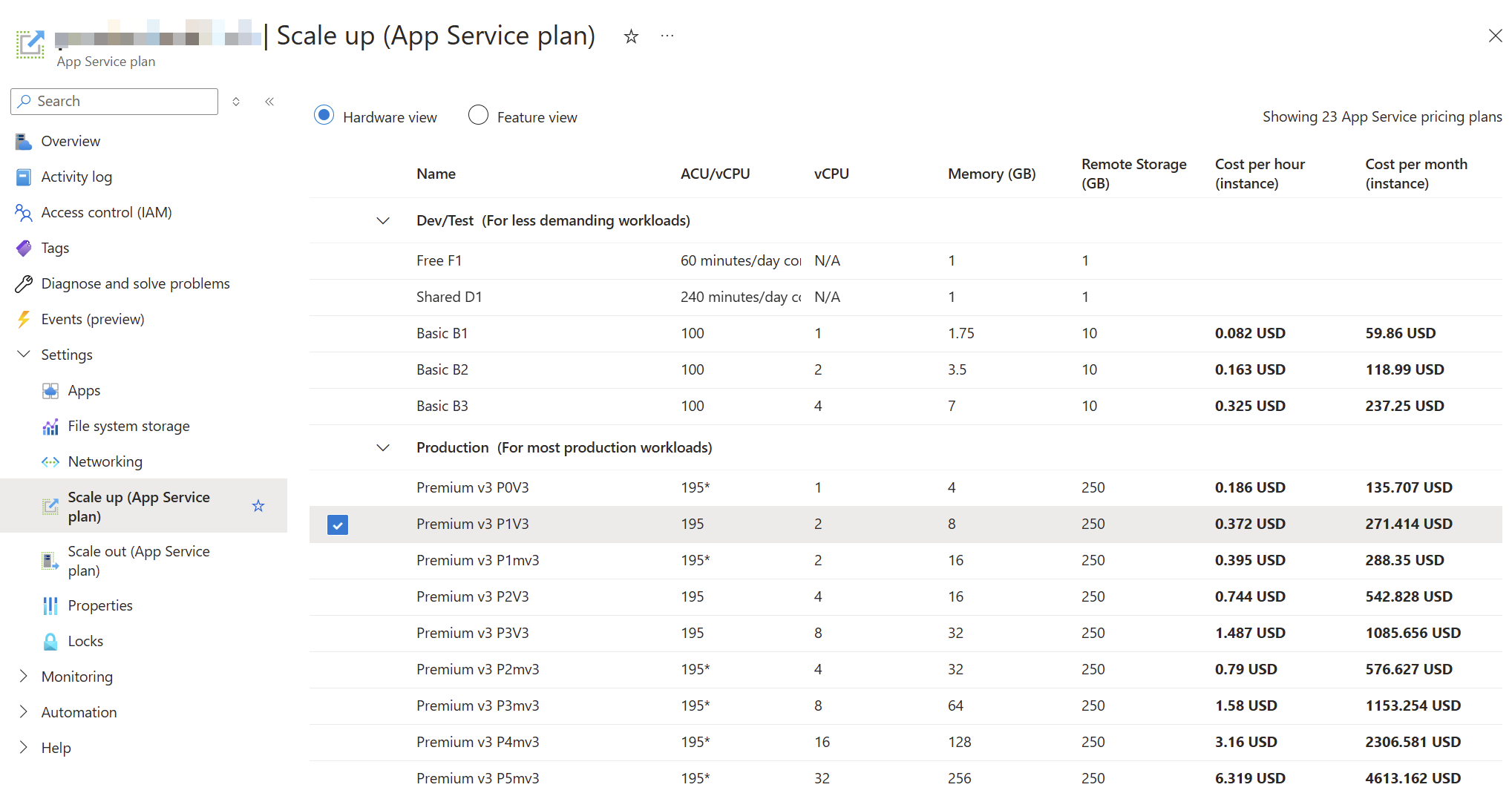

A few months later, we created another resource group in a different region with a new app plan and discovered that there were more plans to choose from:

Especially those memory-optimized plans (“P1mV3” etc.) are interesting for our product.

The problem

So we have two different “App Service Plans” in different resource groups, and one App Service Plan did not show the option for the memory-optimized plans.

This raises a simple question: Why, and is there an easy way to fix it?

Things that won’t work

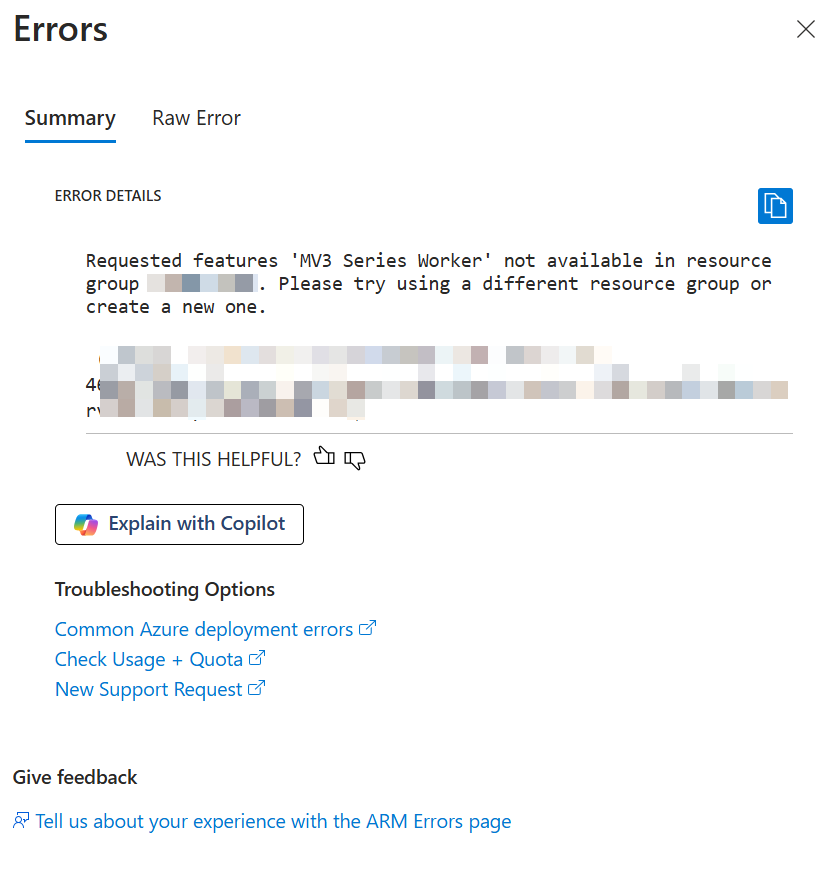

First, I created a new “App Service Plan” within the same resource group as the “old” “App Service Plan,” but this operation failed:

Then I tried to just move the existing “App Service Plan” to a new resource group, but even then, I could not change the SKU to the memory-optimized plan.

The “reason” & solution

After some frustration - since we had existing services and wanted to maintain our structure - I found this documentation site.

Scale up from an unsupported resource group and region combination

If your app runs in an App Service deployment where Premium V3 isn’t available, or if your app runs in a region that currently does not support Premium V3, you need to re-deploy your app to take advantage of Premium V3. Alternatively newer Premium V3 SKUs may not be available, in which case you also need to re-deploy your app to take advantage of newer SKUs within Premium V3. …

It seems the behavior is “as designed,” but I would say that the design is a hassle.

The documentation points out two options for this, but in the end, we will need to create a new app plan and recreate all “Web App-Services” in a new resource group.

Lessons learned?

At first glance, I thought that “resource groups” acted like folders, but underneath—depending on the region, subscription, and existing services within that resource group—some options might not be available.

Bummer, but hey… at least we learned something.

This is more of a “Today-I-Learned” post and not a “full-blown How-To article.” If something is completely wrong, please let me know - thanks!

A customer inquiry brought the topic of “WinINet” and “WinHTTP” to my attention. This blog post is about finding out what this is all about and how and whether or not these components are related to the HttpClient of the .NET Framework or .NET Core.

General

Both WinINet and WinHTTP are Windows components that provide APIs for communication via HTTP/HTTPS. A detailed comparison page can be found here.

WinINet

WinINet is intended in particular for client applications (such as a browser or other applications that communicate via HTTP).

In addition to pure HTTP communication, WinINet also has configuration options for proxies, cookie and cache management.

However, WinINet is not intended for building server-side applications or very scalable applications.

WinHTTP

WinHTTP covers that last use case: it is intended for services and server-side applications that send HTTP requests, and it scales much better there than WinINet.

.NET HttpClient

At first glance, it sounds as if the HttpClient in the .NET Framework or .NET Core (or .NET 5, 6, 7, …) should build on WinINet - but this is not the case.

Instead:

The .NET Framework relies on WinHTTP. Before .NET Core 2.1, the underlying handler on Windows was also based on WinHTTP.

Since .NET Core 2.1, however, a platform-independent “SocketsHttpHandler” works under the HttpClient by default.

However, the HttpClient can partially read the proxy settings from the WinINet world.

The “WinHttpHandler” is still available as an option, although the use case for this is unknown to me.
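To make the handler choice visible, here is a minimal sketch that wires a SocketsHttpHandler into an HttpClient explicitly. It assumes .NET 6 or later; the connection lifetime and the example.com URL are arbitrary placeholders, not recommendations. The WinHttpHandler ships in a separate NuGet package (System.Net.Http.WinHttpHandler) and is left out here to keep the sketch free of dependencies.

```csharp
using System;
using System.Net.Http;

// Explicitly pick the handler that modern .NET uses by default anyway.
var handler = new SocketsHttpHandler
{
    // Use the proxy that .NET detects from the system settings.
    UseProxy = true,
    Proxy = HttpClient.DefaultProxy,
    // Recycle pooled connections from time to time (placeholder value).
    PooledConnectionLifetime = TimeSpan.FromMinutes(5)
};

using var client = new HttpClient(handler);
Console.WriteLine($"Handler in use: {handler.GetType().Name}");

// Ask the detected system proxy what it would do for a sample URL.
var target = new Uri("https://example.com/");
bool direct = HttpClient.DefaultProxy.IsBypassed(target);
Console.WriteLine(direct
    ? "Direct connection (no proxy for this URL)."
    : $"Proxy: {HttpClient.DefaultProxy.GetProxy(target)}");
```

No request is sent; the sketch only shows where the handler and the proxy settings plug in.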

During my research, I noticed this GitHub issue. This issue is about the new SocketsHttpHandler implementation not being able to access the same WinINet features for cache management. The topic is rather theoretical and the issue is already several years old.

Summary

What have we learned? Microsoft has implemented several HTTP stacks, and in “modern” .NET the HttpClient uses its own handler.

Hope this helps!

Eric Evans published the original book about DDD, “Domain-Driven Design: Tackling Complexity in the Heart of Software,” in 2004. It took more than 10 years to become a thing and even longer to not be considered “exotic” and “a theoretical but unnecessarily complicated paradigm.”

In 2024, DDD can be considered a commodity in the sense of the word defined in Wardley mapping (at least in Europe). There are conferences with thousands of attendees, new books every year, and tech start-ups looking for engineers with DDD experience or at least awareness. As usual in this young industry full of people packing old ideas in shiny new envelopes and selling them for the big bucks, one question returns: “Is DDD a hype?”.

The picture in the header of this post is from computerhistory.org (thank you). It shows the first Colossus operational at Bletchley Park in 1944. Computers and information technology, in general, have changed since that picture, going from punched cards to cloud computing and AI. The key booster was the Internet, paving the way to “Space, the final frontier… to boldly go where no man (and women and everything in-between) has gone before” (Star Trek: Enterprise).

When I became a software developer in 2003, the usual jobs were automating manual processes, connecting servers, and using the web to communicate with users instead of snail mail and fax. (Ok, in Germany, both are still heavily used.)

The main goal was to ease the sellers’ jobs and day-to-day lives. SaaS had just been defined. Software was used to automate existing manual, and hence slow, processes. Understanding the business domain was not important because the innovation happened on the technical level. Engineers could sit back and let product managers and designers tell them everything they needed a computer to execute, ideally as an ordered list, in very exact words.

Then, mobile phones became the rulers of our lives. They were more powerful than personal computers, not only because of their RAM and CPU but also because they were portable. They allowed us to take and share pictures and videos, ask for timetables, buy tickets, and look for weather changes while moving. 24 hours a day. The differentiators of a product changed from “how usable” to “how easy to use” and “how fast it evolves”. The colour of a button became irrelevant compared to the whole user experience and the user’s perception of a product.

Today, anyone with a logical brain can learn and write software. Infrastructure is cheap and easily accessible without a substantial up-front investment. Because it is available on demand, companies can run short-term experiments without significant risks. Understanding tech is no longer a key differentiator. Understanding the user has become the key to sustainable market share.

Now that the phase of simply automating existing processes is over, the game has changed. Engineers must not only ensure that the software they create works and is maintainable (readable and extendable) with an acceptable effort, but they also must not ignore the “world outside of bits and bytes” anymore. Product development needs to be collaborative work. Adapting to new market needs, trying new ways, and getting fast feedback are more important than ever. These experiments are not about technology (the users don’t really care if we use MySQL or MongoDB) but about helping users get their jobs done faster.

“Are you telling me I can’t build software that solves users’ needs without DDD?!” That’s a valid remark; I am not. Developers have always written software to serve someone’s goals, even in pet projects.

The need for collaboration changes the rules of the game. It changes how we work, understand the underlying problem, and decide what to build (and what not). During collaborative discovery, the user leaves the desks of the product managers and designers and joins our commonly maintained Miro boards. DDD enables us to represent the domain understanding in our software and organisation. The strategic design of DDD gives us options for the future without knowing what the future will look like. The tactical patterns give us strong weapons against continuously deteriorating software. DDD unveils how useless questions like “How small is a micro-service?” are. It eliminates the (again) useless answer “It depends”. The answer depends on the context. When asked in a digital context, the answer must always be put in the context of the user’s needs and the socio-technical organisation. It does not depend on the mood of the consultant.

Unlike the usual cases, when an old solution is hyped again in a refurbished version, DDD is not a paradigm invented, forgotten and resurrected after years. It is a paradigm for solving problems in a way that has always been valid, but until a few years ago, it was not considered important enough. It took years of businesses wasting money, of engineers feeling the pain while handling “big balls of mud”, and of anger because “the requirements have changed” and the technical decisions of the past had become a heavy blocker instead of an enabler.

Event storming with domain experts


This is more of a “Today-I-Learned” post and not a “full-blown How-To article.” If something is completely wrong, please let me know - thanks!

A customer notified us that our product was slowing down their Microsoft Office installation at startup.

Everything on our side seemed fine, but sometimes Office took 10–15 seconds to start.

After some research, I stumbled upon this: Performance analyzer for Microsoft Defender Antivirus.

The best part about this application is how easy it is to use (as long as you have a prompt with admin privileges). Simply run this PowerShell command:

New-MpPerformanceRecording -RecordTo recording.etl

This will start the recording session. After that, launch the program you want to analyze (e.g., Microsoft Office). When you’re done, press Enter to stop the recording.

The generated recording.etl file can be complex to read and understand. However, there’s another command to extract the “Top X” scans, which makes the data way more readable.

Use this command to generate a CSV file containing the top 1,000 files scanned by Defender during that time:

(Get-MpPerformanceReport -Path .\recording.etl -Topscans 1000).TopScans | Export-CSV -Path .\recording.csv -Encoding UTF8 -NoTypeInformation

Using this tool, we discovered that Microsoft Defender was scanning all our assemblies, which was causing Office to start so slowly.

Now you know: If you ever suspect that Microsoft Defender is slowing down your application, just check the logs.

Note: After this discovery, the customer adjusted their Defender settings, and everything worked as expected.

Hope this helps!

This is more of a “Today-I-Learned” post and not a “full-blown How-To article.” If something is completely wrong, please let me know - thanks!

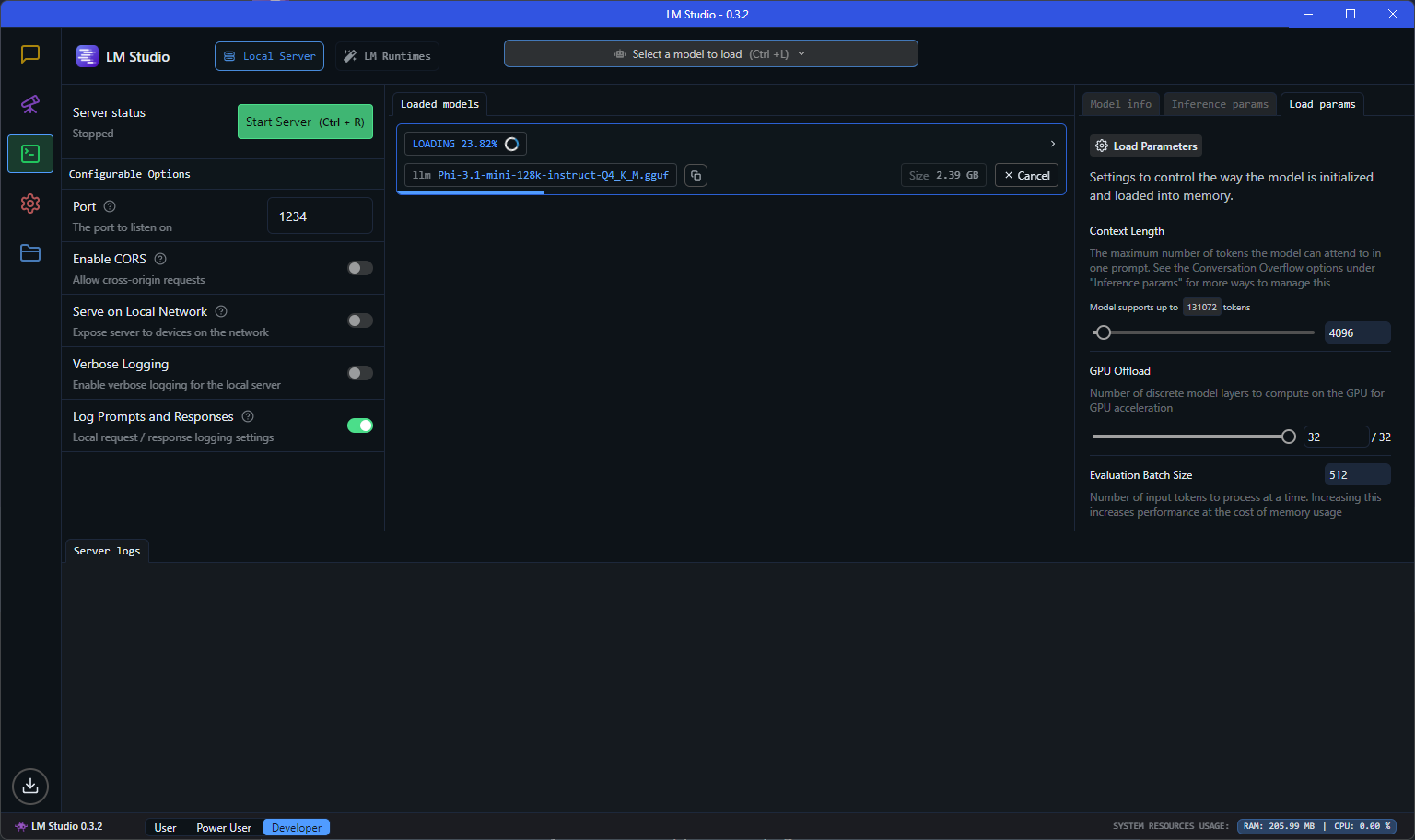

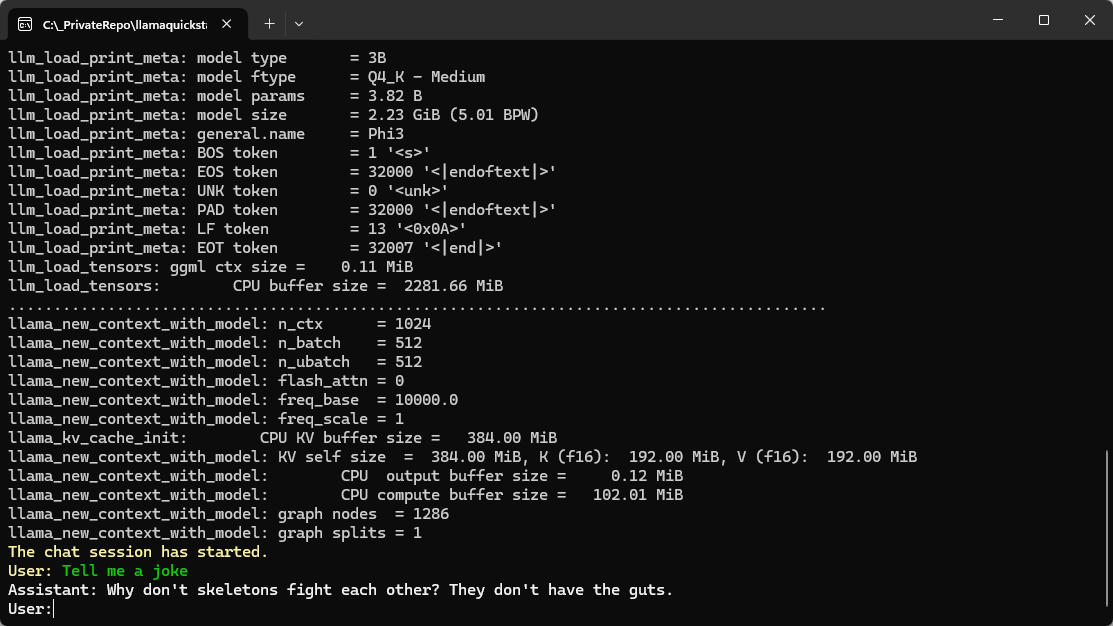

I had the opportunity to attend the .NET User Group Dresden at the beginning of September for the exciting topic “Using Open Source LLMs” and learned a couple of things.

How to choose an LLM?

There are tons of LLMs (= Large Language Models) that can be used, but which one should we choose? There is no general answer to that - of course - but there is a Chatbot Arena Leaderboard, which compares the “cleverness” of those models. Be aware of the license of each model.

There is also a HuggingChat, where you can pick some models and experiment with them.

For your first steps on your local hardware: Phi3 does a good job and is not a huge model.

LM Studio

Ok, you have a model and an idea, but how do you play with it on your local machine?

The best tool for such a job is: LM Studio.

The most interesting part was (and this was “new” to me) that you can run those local models behind a local, OpenAI-compatible (!!!) server.

OpenAI Compatible server?!

If you want to experiment with a lightweight model on your system and interact with it, it is super handy to use the standard OpenAI client and just run it against your local “OpenAI”-like server.

Just start the server, use the localhost endpoint, and you can use code like this:

using OpenAI.Chat;
using System.ClientModel;

ChatClient client = new(model: "model", "key",
    new OpenAI.OpenAIClientOptions()
    { Endpoint = new Uri("http://localhost:1234/v1") });

ChatCompletion chatCompletion = client.CompleteChat(
    [
        new UserChatMessage("Say 'this is a test.'"),
    ]);

Console.WriteLine(chatCompletion.Content[0].Text);

The model and the key don’t seem to matter that much (or at least it worked on my machine). The localhost:1234 service is hosted by LM Studio on my machine. The actual model can be configured in LM Studio and there is a huge choice available.
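For a feeling of what “OpenAI compatible” means on the wire, here is a rough sketch of the JSON that travels to and from the chat completions endpoint, using only System.Text.Json. The helper names BuildChatRequest and ExtractReply are made up for this illustration, and the sample response is hand-written and trimmed to the fields the sketch reads; a real server returns more.

```csharp
using System;
using System.Text.Json;

// Hypothetical helper: builds the JSON body for POST {base}/chat/completions.
static string BuildChatRequest(string model, string userMessage) =>
    JsonSerializer.Serialize(new
    {
        model,
        messages = new[] { new { role = "user", content = userMessage } }
    });

// Hypothetical helper: pulls the assistant text out of a chat completion response.
static string ExtractReply(string responseJson)
{
    using JsonDocument doc = JsonDocument.Parse(responseJson);
    return doc.RootElement
        .GetProperty("choices")[0]
        .GetProperty("message")
        .GetProperty("content")
        .GetString() ?? string.Empty;
}

string request = BuildChatRequest("model", "Say 'this is a test.'");
Console.WriteLine(request);

// Hand-written sample response, reduced to the fields used above.
string sampleResponse =
    "{\"choices\":[{\"message\":{\"role\":\"assistant\",\"content\":\"This is a test.\"}}]}";
Console.WriteLine(ExtractReply(sampleResponse));
```

Nothing is sent over the network here. Any server that accepts this request shape and answers with this response shape can sit behind the standard client.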

Even streaming is supported:

AsyncCollectionResult<StreamingChatCompletionUpdate> updates
    = client.CompleteChatStreamingAsync("Write a short story about a pirate.");

Console.WriteLine($"[ASSISTANT]:");

await foreach (StreamingChatCompletionUpdate update in updates)
{
    foreach (ChatMessageContentPart updatePart in update.ContentUpdate)
    {
        Console.Write(updatePart.Text);
    }
}

Ollama

The obvious next question is: How can I run my own LLM on my own server? LM Studio works fine, but it’s just a development tool.

One answer could be: Ollama, which can run large language models and offers an OpenAI-compatible API.
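As a rough sketch of what that compatibility buys you, the following snippet posts a chat request to a local Ollama instance with a plain HttpClient. It assumes Ollama listens on its default port 11434 and that a model named phi3 has already been pulled; both are assumptions about your setup, and the helper names ChatCompletionsUri and AskAsync are made up for this example. It needs .NET 7 or later because of the raw string literal.

```csharp
using System;
using System.Net.Http;
using System.Text;
using System.Threading.Tasks;

// Hypothetical helper: appends the chat completions path to an OpenAI-style base URL.
static Uri ChatCompletionsUri(string baseUrl) =>
    new Uri(new Uri(baseUrl.TrimEnd('/') + "/"), "chat/completions");

// Hypothetical helper: posts the JSON body and returns the raw JSON response.
static async Task<string> AskAsync(HttpClient http, string baseUrl, string json)
{
    using var content = new StringContent(json, Encoding.UTF8, "application/json");
    using HttpResponseMessage response =
        await http.PostAsync(ChatCompletionsUri(baseUrl), content);
    response.EnsureSuccessStatusCode();
    return await response.Content.ReadAsStringAsync();
}

using var http = new HttpClient { Timeout = TimeSpan.FromSeconds(60) };

// Assumption: the model "phi3" is available on the local Ollama instance.
const string body =
    """{"model":"phi3","messages":[{"role":"user","content":"Say 'this is a test.'"}]}""";

try
{
    Console.WriteLine(await AskAsync(http, "http://localhost:11434/v1", body));
}
catch (Exception ex) when (ex is HttpRequestException or TaskCanceledException)
{
    // Covers "server not running", error status codes and timeouts.
    Console.WriteLine($"Request failed: {ex.Message}");
}
```

Pointing the same code at LM Studio should only require changing the base URL to http://localhost:1234/v1.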

Is there an Ollama for .NET devs?

Ollama looks cool, but I was hoping to find an “OpenAI compatible .NET facade”. I already played with LLamaSharp, but LLamaSharp currently doesn’t offer a Web API, although there are some ideas around.

My friend Gregor Biswanger released OllamaApiFacade. It looks promising, but at least for now it doesn’t offer a real OpenAI-compatible .NET facade; maybe this will be added in the future.

Acknowledgment

Thanks to the .NET User Group for hosting the meetup, and a special thanks to my good friend Oliver Guhr, who was also the speaker!

Hope this helps!

We recently switched from .NET 6 to .NET 8 and encountered the following Entity Framework Core error:

Microsoft.Data.SqlClient.SqlException: 'Incorrect syntax near the keyword 'WITH'....

The EF code uses the Contains method as shown below:

var names = new[] { "Blog1", "Blog2" };

var blogs = await context.Blogs
    .Where(b => names.Contains(b.Name))
    .ToArrayAsync();

Before .NET 8, this would result in the following SQL statement:

SELECT [b].[Id], [b].[Name]
FROM [Blogs] AS [b]
WHERE [b].[Name] IN (N'Blog1', N'Blog2')

… and with .NET 8 it uses the OPENJSON function, which is not supported on older versions like SQL Server 2014 or if the compatibility level is below 130 (!)